When Multimodal Models Lose Vision but Retain Language

Optimizing multimodal models for speed and efficiency reveals a curious asymmetry: as the models shrink, their visual processing degrades much faster than their linguistic reasoning. This disparity is a serious obstacle for systems that depend on precise visual interpretation, from intelligent assistants to household robots. 👁️🗨️

The Fundamental Problem of Multimodal Compression

When developers shrink multimodal models to make them faster and cheaper to run, visual understanding suffers disproportionately compared with language processing. The smaller model can misread scenes and objects even while its language component retains some reasoning ability. The practical consequence is that a system that appears to work can still make serious errors on tasks that require precise visual perception.

Consequences of the visual-linguistic asymmetry:

- Virtual assistants that misinterpret photographs and visual scenes

- Household robots struggling to recognize objects and contexts

- Automation systems failing in visually complex environments

"Degraded visual perception in smaller models can lead to erroneous interpretations even when the linguistic component maintains reasoning capacity"

Extract+Think: The Two-Stage Solution

The research presents Extract+Think, a method built around two distinct stages. First, the model is trained to consistently extract the visual details relevant to each specific instruction. Then it reasons step by step over those extracted elements to produce its answer. By pinning down the critical visual facts before any analysis begins, this structure helps even compact models retain a high level of visual understanding.

Advantages of the Extract+Think approach:

- Selective extraction of relevant visual details

- Structured reasoning over identified elements

- Preservation of visual capabilities in optimized models
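The two stages can be made concrete with a small sketch. The Python below is not the authors' implementation: in Extract+Think both stages are carried out by a trained model, whereas here `extract` is a keyword-matching stand-in and `think` is a hand-written rule. Both operate on a toy scene of hypothetical `Detection` records (label, color, location) that are assumed to come from some upstream vision component. The sketch only illustrates the data flow: the reasoning stage sees nothing but what the extraction stage kept.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    """A single object reported by a hypothetical vision front end."""
    label: str
    color: str
    location: str


def extract(scene: list[Detection], instruction: str) -> list[Detection]:
    """Stage 1 (stand-in): keep only the detections the instruction mentions.

    In Extract+Think this step is learned by the model; keyword matching
    here is purely illustrative.
    """
    words = set(instruction.lower().replace("?", "").split())
    return [d for d in scene if d.label in words]


def think(facts: list[Detection], instruction: str) -> tuple[list[str], str]:
    """Stage 2 (stand-in): reason step by step over the extracted facts only."""
    steps = [f"Extracted {len(facts)} relevant object(s)."]
    if not facts:
        steps.append("Nothing in the scene matches the instruction.")
        return steps, "unknown"
    for d in facts:
        steps.append(f"The {d.label} is {d.color} and sits {d.location}.")
    # A toy rule: color questions are answered from the color attribute,
    # everything else from the location attribute.
    if "color" in instruction.lower():
        answer = ", ".join(d.color for d in facts)
    else:
        answer = ", ".join(f"{d.label} {d.location}" for d in facts)
    steps.append(f"Answer: {answer}")
    return steps, answer


def extract_then_think(scene: list[Detection], instruction: str) -> tuple[list[str], str]:
    """Run the two stages in sequence; think() never sees the full scene."""
    facts = extract(scene, instruction)
    return think(facts, instruction)


if __name__ == "__main__":
    scene = [
        Detection("mug", "red", "on the table"),
        Detection("laptop", "silver", "next to the mug"),
        Detection("plant", "green", "by the window"),
    ]
    steps, answer = extract_then_think(scene, "What color is the mug?")
    for step in steps:
        print(step)
```

Run as a script, it prints the extracted-object count, the single fact about the mug, and `Answer: red`. In this sketch `think` never receives the full scene, so the laptop and the plant cannot distract it; the flip side is that it cannot recover a detail that `extract` dropped.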

Practical Applications in Resource-Limited Environments

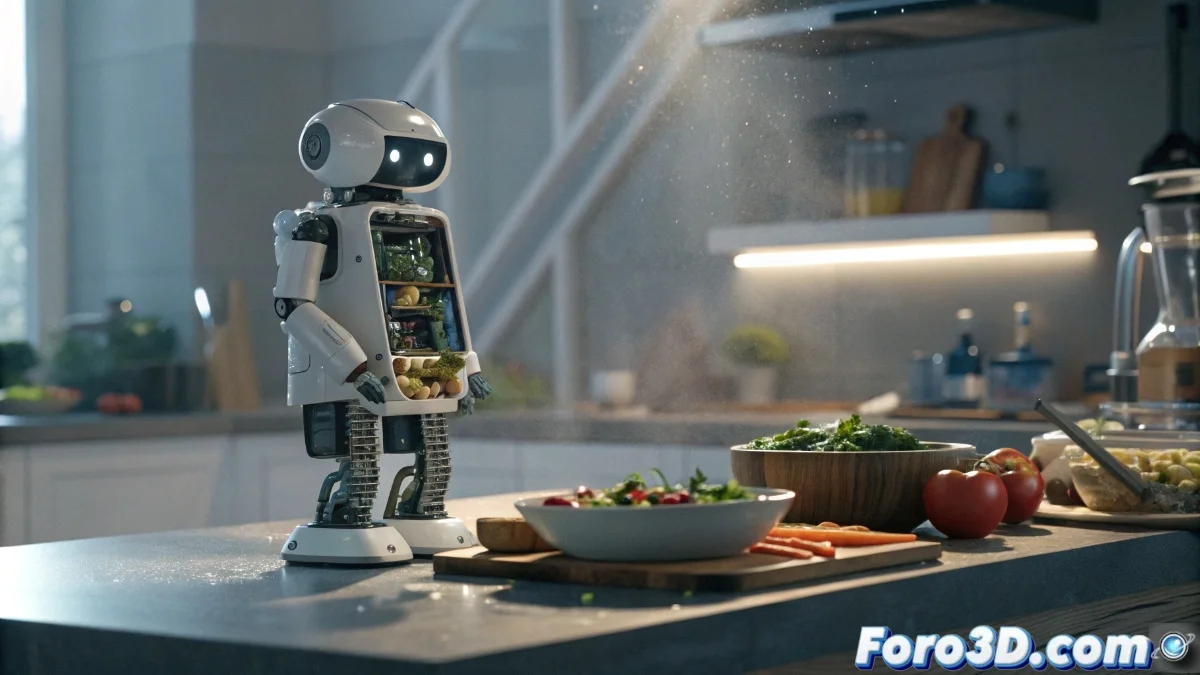

This method is especially valuable in real-world settings where hardware is constrained. A virtual assistant analyzing images can keep its understanding of a scene correct if it first identifies the objects and important details and only then reasons about them. Likewise, a household robot with limited compute can recognize the ingredients in a kitchen and follow a recipe accurately by extracting the key visual elements first and reasoning over them second.

Use cases with limited hardware:

- Mobile virtual assistants analyzing photos of their surroundings

- Affordable household robots interacting with everyday objects

- Embedded systems processing visual information in real time
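The kitchen-robot scenario described above can be sketched the same way. Again, this is an illustrative assumption rather than the research's method: the `Detection` records with a confidence score, the 0.6 threshold, and the recipe format (a list of action and required-ingredient pairs) are all hypothetical. Stage 1 keeps only confident detections of ingredients the recipe needs; stage 2 walks the recipe one step at a time and pauses at the first step whose ingredients were not confidently seen.

```python
from dataclasses import dataclass

# Hypothetical recipe format: (action, ingredients required for that action).
Recipe = list[tuple[str, tuple[str, ...]]]


@dataclass(frozen=True)
class Detection:
    """Hypothetical detector output: an object label and a score in [0, 1]."""
    label: str
    confidence: float


def extract_ingredients(detections: list[Detection], recipe: Recipe,
                        min_conf: float = 0.6) -> set[str]:
    """Stage 1: keep confident detections of ingredients the recipe needs.

    The 0.6 threshold is an arbitrary illustrative choice.
    """
    needed = {item for _, items in recipe for item in items}
    return {d.label for d in detections
            if d.label in needed and d.confidence >= min_conf}


def plan_steps(recipe: Recipe, available: set[str]) -> list[str]:
    """Stage 2: check each recipe step, in order, against the extracted ingredients."""
    plan = []
    for action, items in recipe:
        missing = sorted(set(items) - available)
        if missing:
            # Stop at the first step that depends on something not confidently seen.
            plan.append(f"PAUSE before '{action}': cannot see {', '.join(missing)}")
            break
        plan.append(f"OK: {action}")
    return plan


if __name__ == "__main__":
    recipe = [
        ("crack eggs into bowl", ("egg",)),
        ("whisk in milk", ("milk",)),
        ("add grated cheese", ("cheese",)),
    ]
    detections = [
        Detection("egg", 0.93),
        Detection("milk", 0.81),
        Detection("cheese", 0.42),  # seen, but below the confidence threshold
        Detection("knife", 0.97),   # confident, but irrelevant to the recipe
    ]
    available = extract_ingredients(detections, recipe)
    for line in plan_steps(recipe, available):
        print(line)
```

Run as a script, this prints two `OK` lines and then pauses before the cheese step: the cheese detection falls below the threshold, and the knife is discarded because no recipe step needs it.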

The Paradox of Human vs. Artificial Learning

It is ironic that AI systems must be explicitly taught to separate the essential from the incidental before drawing conclusions, a skill humans develop naturally in early childhood. Children pick it up by kindergarten, while machines need dedicated, specialized training to reach a comparable level of selective visual discernment. The contrast underscores how hard it is to replicate human perception in artificial systems. 🤖