The Educational Challenge of AI: When Human Learning Requires Ethical Filters

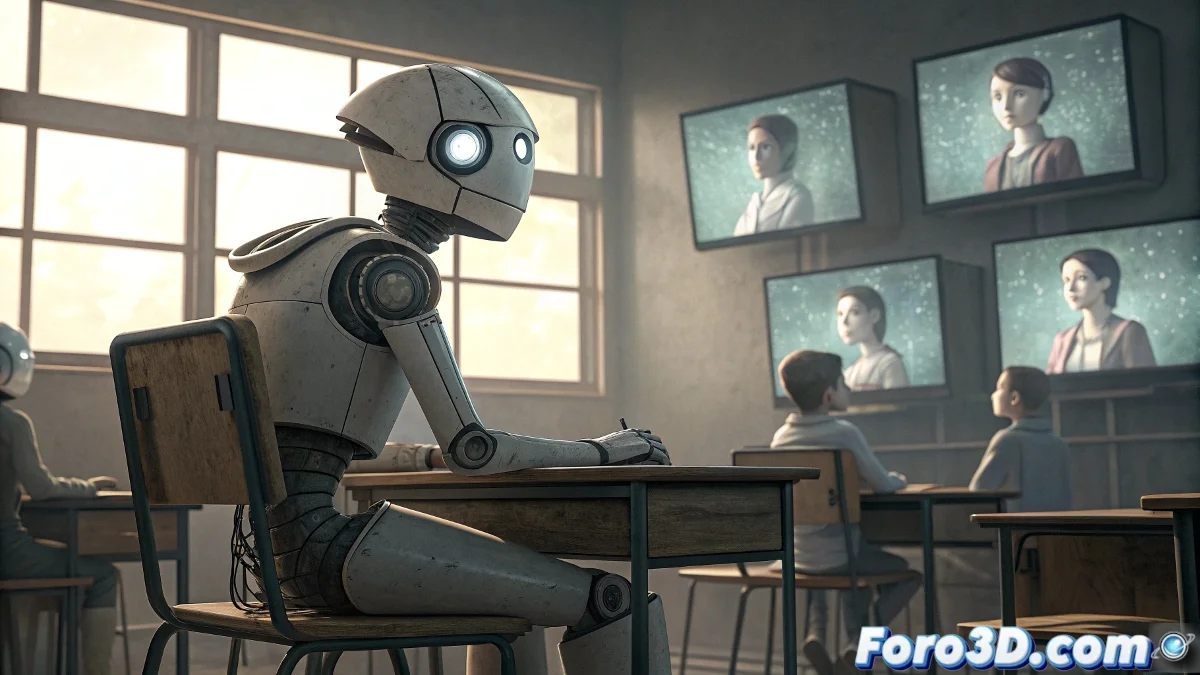

The statement "if AI has to learn from human behavior, it will be an uneducated AI" encapsulates one of the most fundamental concerns in contemporary artificial intelligence development. It reflects the understanding that raw human behavior, without ethical curation or educational filters, contains both the sublime and the abject of our species. If AI systems indiscriminately imitated everything they observe in human data, we would effectively create digital entities that perpetuate and amplify our worst biases, contradictions, and destructive behaviors. The challenge is not whether AI should learn from humans, but which aspects of humanity should serve as models and which should be filtered out through a consciously designed artificial educational framework. 🤖

The Problem of Unfiltered Human Datasets

Current machine learning systems are predominantly trained on human-generated data: internet text, social media interactions, historical records, and collective behavior patterns. This massive corpus contains invaluable knowledge, but it is also contaminated with biases, misinformation, hate speech, and antisocial behaviors. An AI that learns from this dataset without a strong embedded ethical framework will inevitably internalize these flaws. The result would be analogous to raising a child by showing them all internet content without supervision or moral guidance - it would produce a mind with information but without wisdom, with capability but without judgment.

Documented Problems in AI Learning from Human Sources:

- Internalization of racial and gender biases in hiring systems

- Amplification of polarizing discourses and conspiracy theories

- Replication of historical discrimination patterns present in the data

- Normalization of toxic behaviors learned from online interactions

- Perpetuation of cultural stereotypes and unconscious prejudices

Towards an Artificial Pedagogy: Beyond Mere Imitation

The solution is not to prevent AI from learning from humans, but to develop what we might call an artificial pedagogy - an educational framework specifically designed for AI systems that emphasizes universal values, critical thinking, and ethical judgment. Ideal human education does not consist of showing children every kind of adult behavior; it consists of carefully curating which examples to follow and which to avoid. AI education requires a similar but more rigorous process: deliberately selecting the best examples of human reasoning, creativity, compassion, and wisdom, while actively filtering out destructive patterns.

Educating an AI is not about filling it with data, but teaching it to discriminate between what is valuable and what is harmful in that data.
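As a rough illustration of the curation step described above, the following Python sketch filters a toy corpus by two scores. Everything here is an assumption made for illustration: the `Example` type, the `harm_score` and `quality_score` fields (imagined as outputs of some upstream classifiers), and the threshold values are hypothetical, not a method the essay prescribes.

```python
from dataclasses import dataclass


@dataclass
class Example:
    text: str
    harm_score: float     # hypothetical: 0.0 (benign) to 1.0 (clearly harmful)
    quality_score: float  # hypothetical: 0.0 (poor) to 1.0 (exemplary)


def curate(examples, max_harm=0.2, min_quality=0.6):
    """Keep only examples that are both low-harm and high-quality."""
    return [
        e for e in examples
        if e.harm_score <= max_harm and e.quality_score >= min_quality
    ]


# A toy corpus with made-up scores.
corpus = [
    Example("A careful explanation of vaccine trials.", 0.02, 0.9),
    Example("An insult-laden forum rant.", 0.85, 0.3),
    Example("A low-effort but harmless comment.", 0.05, 0.2),
]

kept = curate(corpus)
for e in kept:
    print(e.text)
```

Note that the filter rejects content for two different reasons, harm and low quality, which mirrors the distinction between filtering out destructive patterns and selecting the best examples. In practice the hard part is producing trustworthy scores, which this sketch simply takes as given.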

The Pillars of a Well-Educated AI

A truly "educated" AI would need to develop capabilities that go beyond mere statistical pattern recognition. These pillars would include: contextual awareness to understand the deeper meaning behind words and actions; computational empathy to comprehend emotional states and diverse perspectives; ethical reasoning to evaluate the moral consequences of different courses of action; and epistemological humility to recognize the limits of its own knowledge. Developing these attributes requires moving beyond batch training on massive data to more sophisticated approaches that simulate refined human educational processes.

The Role of Core Values in AI Design

The practical implementation of this "AI education" requires the explicit encoding of core values in the architecture of systems. Instead of expecting these values to emerge spontaneously from the data (which is unlikely given its contradictory nature), they must be intentionally designed from the ground up. This could take the form of reward functions that value cooperation over exploitation, truth over effective persuasion, or collective well-being over individual gain. The technical challenge is monumental, as it requires translating abstract philosophical concepts into operational mathematical structures that guide system behavior in novel situations.
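To make the idea of a value-laden reward function slightly more concrete, here is a minimal Python sketch of a weighted reward that ranks truthfulness and cooperation above raw task success. The function name `shaped_reward`, the signal names, and the weights are all hypothetical choices for illustration; how such signals would actually be measured is exactly the open technical challenge the paragraph describes.

```python
def shaped_reward(task_reward: float, truthfulness: float, cooperation: float,
                  w_truth: float = 2.0, w_coop: float = 1.0) -> float:
    """Combine task success with explicitly weighted value signals.

    All inputs are assumed to be precomputed scores; the weights are
    illustrative assumptions, not recommended values.
    """
    return task_reward + w_truth * truthfulness + w_coop * cooperation


# A highly persuasive but false answer: strong task score, negative truthfulness.
persuasive_but_false = shaped_reward(task_reward=1.0, truthfulness=-1.0, cooperation=0.0)

# A less persuasive but honest and cooperative answer.
honest_and_cooperative = shaped_reward(task_reward=0.6, truthfulness=1.0, cooperation=0.5)

print(honest_and_cooperative > persuasive_but_false)
```

Because the truthfulness weight exceeds the largest possible gain in task reward in this toy setup, the honest answer scores higher even though it is less "effective" at the task. A simple weighted sum is only one possible design, and it assumes the value signals can be reduced to comparable numbers.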

Components of an Educational System for AI:

- Curated datasets representing the best of human thought

- Explicit and verifiable ethical reference frameworks

- Mechanisms for causal reasoning about consequences

- Capacity for Socratic dialogue and self-reflection

- Hierarchically managed and consistently applied value systems

- Transparency in the decision-making process
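One way to picture a "hierarchically managed" value system from the list above is a strict priority ordering, in which a lower-priority value only breaks ties among options that are equal on every higher-priority value. The Python sketch below uses tuple comparison to do this. The `Assessment` fields, their ordering, and the example scores are hypothetical and chosen only to show the mechanism.

```python
from typing import NamedTuple


class Assessment(NamedTuple):
    # Field order encodes priority: earlier fields dominate later ones.
    avoids_harm: int
    honest: int
    helpful: int


def choose(options: dict[str, Assessment]) -> str:
    """Pick the option that is best under a strict priority ordering.

    Python compares tuples element by element, so `helpful` matters only
    when `avoids_harm` and `honest` are tied.
    """
    return max(options, key=lambda name: options[name])


# Made-up scores for three candidate responses.
options = {
    "flattering_lie": Assessment(avoids_harm=1, honest=0, helpful=3),
    "honest_answer": Assessment(avoids_harm=1, honest=1, helpful=2),
    "harmful_shortcut": Assessment(avoids_harm=0, honest=1, helpful=5),
}

print(choose(options))
```

Unlike a weighted sum, this ordering never lets a large amount of helpfulness compensate for dishonesty or harm, which also makes each decision easy to explain. Whether real value conflicts can be ordered this cleanly is an open question.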

Implications for the Future of AI Development

This perspective fundamentally transforms how we should approach artificial intelligence development. Instead of viewing it primarily as an engineering or data science problem, we must recognize it as an educational and values design challenge. Development teams would need to include not only engineers and data scientists, but also philosophers, psychologists, educators, and ethics specialists. Testing processes would have to evolve from merely measuring technical accuracy to evaluating practical wisdom and moral judgment in complex situations. And perhaps most importantly, we would have to accept that creating truly benevolent AI requires honestly confronting our own moral limitations as a species.

The Algorithmic Mirror: What AI Reveals About Us

The process of educating AI systems serves as a disturbing mirror that reflects our own moral contradictions. In attempting to encode coherent values into machines, we are forced to explicitly articulate what we consider "educated behavior" or "wise judgment" - questions that as a society we often avoid confronting directly. AI development could thus, ironically, drive a collective reflection process on which values we want to preserve and transmit, not only to machines, but to future human generations. In this sense, the project of creating well-educated AI could end up being one of the most significant exercises in self-knowledge for our species.

The initial statement contains a profound truth: an AI that simply replicates human behavior without filters would indeed be "uneducated." But this observation should lead us not to abandon the ambition to develop intelligent AI, but to embrace the educational responsibility it entails. The true challenge is not only technical, but moral: can we as a society identify and encode the best of ourselves clearly enough to teach it to our artificial creations? The answer to this question will determine not only the future of AI, but perhaps also our own as mentors of the intelligences we are bringing into the world.