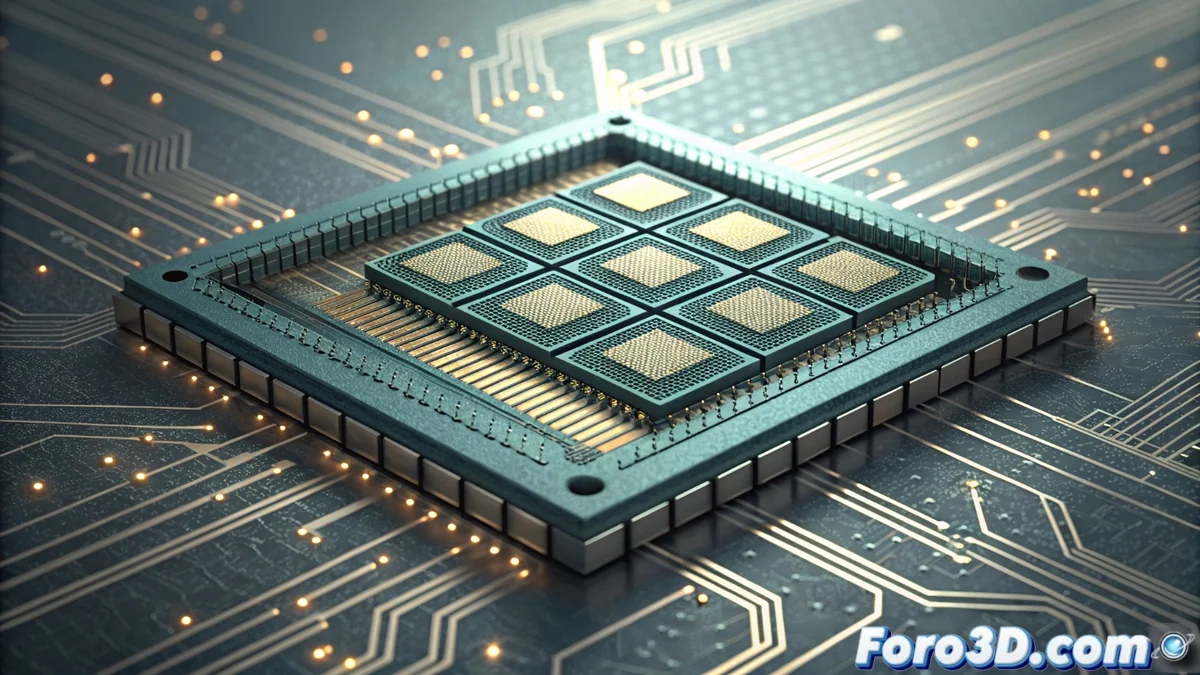

The PIM Architecture: Processing in Memory as a Hardware Revolution

Hardware design is undergoing a radical transformation with the emergence of Processing in Memory (PIM) architecture. This paradigm challenges decades of convention by placing computational logic directly within memory modules, whether DDR or advanced HBM (High Bandwidth Memory) stacks. The principle is to execute operations where the data resides, so that large volumes of data no longer have to travel across the memory bus to the CPU or GPU. This shift promises to ease the memory bottleneck, one of the most critical constraints in modern computing. 🚀
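To make the principle concrete, here is a minimal sketch in Python that counts how many bytes cross the bus when summing a dataset. It is a toy model, not real PIM code: the `Bank` class, the 8-byte word size, and the function names are all assumptions made for illustration.

```python
from dataclasses import dataclass

WORD_BYTES = 8  # assumed size of one stored value


@dataclass
class Bank:
    """Hypothetical memory bank holding one slice of the dataset."""
    values: list

    def read_all(self):
        """Conventional path: ship every value to the host."""
        return list(self.values), len(self.values) * WORD_BYTES

    def local_sum(self):
        """PIM-style path: reduce inside the bank, ship a single word."""
        return sum(self.values), WORD_BYTES


def host_sum(banks):
    """Sum on the host; returns (total, bytes moved over the bus)."""
    total, moved = 0, 0
    for bank in banks:
        values, nbytes = bank.read_all()
        total += sum(values)
        moved += nbytes
    return total, moved


def pim_sum(banks):
    """Sum inside each bank; the host only combines the partial results."""
    total, moved = 0, 0
    for bank in banks:
        partial, nbytes = bank.local_sum()
        total += partial
        moved += nbytes
    return total, moved


# Four banks of 1000 values each, together holding 0..3999.
banks = [Bank(list(range(i * 1000, (i + 1) * 1000))) for i in range(4)]
print(host_sum(banks))  # (7998000, 32000)
print(pim_sum(banks))   # (7998000, 32)
```

Both paths compute the same total, but in this model the host-side version moves every stored word while the in-memory version moves one word per bank. Real hardware adds costs this sketch ignores, such as command overhead and the limited speed of logic built into memory chips.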

Transformative Advantages and Use Cases

The impact of PIM manifests in two key dimensions: raw performance and energy efficiency. By drastically reducing data movement, it cuts the energy spent on memory transfers, a crucial advancement for data centers and supercomputing. Applications that scan large datasets while doing relatively little computation per byte are the primary beneficiaries: access latency drops and effective bandwidth increases.

Key Application Areas:

- Machine Learning and AI: Accelerates model training and inference by processing data matrices directly in memory.

- Database Analysis: Speeds up complex queries and filtering and sorting operations on large volumes of information.

- Scientific Simulation and Rendering: Optimizes tasks requiring intensive access to buffers and complex geometries, common in 3D graphics and CFD.
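As a rough illustration of the machine learning case, the sketch below splits a matrix by rows across several banks and lets each bank compute its own slice of a matrix-vector product. Only the small input vector and the output slices would need to travel, while the matrix rows stay where they are stored. The row-block layout and the function names are hypothetical, and the "banks" are plain Python lists.

```python
def split_rows(matrix, num_banks):
    """Assign contiguous row blocks to banks (a hypothetical data layout)."""
    rows_per_bank = max(1, -(-len(matrix) // num_banks))  # ceiling division
    return [matrix[i:i + rows_per_bank]
            for i in range(0, len(matrix), rows_per_bank)]


def bank_matvec(rows, vector):
    """Work done inside one bank: dot product of each local row with the vector."""
    return [sum(a * x for a, x in zip(row, vector)) for row in rows]


def pim_matvec(matrix, vector, num_banks):
    """Broadcast the vector to every bank and concatenate the output slices."""
    result = []
    for rows in split_rows(matrix, num_banks):
        result.extend(bank_matvec(rows, vector))
    return result


matrix = [[1, 2], [3, 4], [5, 6], [7, 8]]
vector = [10, 1]
print(pim_matvec(matrix, vector, num_banks=2))  # [12, 34, 56, 78]
```

The result matches an ordinary matrix-vector product; what changes is where the multiply-accumulate work happens and which operands have to move.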

The final irony is that, after decades of optimizing CPUs to move data faster, the solution seems to be not moving it at all.

Obstacles on the Path to Adoption

Despite its potential, PIM faces profound implementation challenges. It requires rethinking the memory hierarchy and the software that manages it. Developers need new programming models and tools to take advantage of this decentralized processing.

Main Challenges to Overcome:

- Design Complexity: Integrating computational logic into dense memory chips poses manufacturing, heat dissipation, and reliability issues.

- Software Ecosystem: New compilers, libraries, and frameworks are needed to abstract hardware complexity for programmers.

- System Architecture: Coordination between CPU, GPU, and multiple PIM units in memory requires new interconnection and coherence designs.
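The coherence problem in the last point can be shown with a toy model: if a PIM unit updates memory in place, a copy already held in a host cache goes stale unless something invalidates it. The `Memory` and `HostCache` classes below are hypothetical stand-ins written for this sketch; they do not model any real coherence protocol.

```python
class Memory:
    """Toy memory whose contents a PIM unit can modify in place."""

    def __init__(self, data):
        self.data = list(data)

    def pim_increment(self, amount):
        """Hypothetical in-memory operation: add `amount` to every value."""
        self.data = [value + amount for value in self.data]


class HostCache:
    """Toy host-side cache with explicit, software-managed invalidation."""

    def __init__(self, memory):
        self.memory = memory
        self.lines = {}

    def read(self, index):
        # Fetch from memory only on a miss; later reads hit the cached copy.
        if index not in self.lines:
            self.lines[index] = self.memory.data[index]
        return self.lines[index]

    def invalidate(self):
        """Drop all cached copies so the next read goes back to memory."""
        self.lines.clear()


memory = Memory([10, 20, 30])
cache = HostCache(memory)

first = cache.read(0)     # 10, now cached on the host
memory.pim_increment(5)   # memory holds 15, but the cache is not told
stale = cache.read(0)     # still 10: the host sees outdated data
cache.invalidate()        # explicit coherence action
fresh = cache.read(0)     # 15, re-read from memory

print(first, stale, fresh)  # 10 10 15
```

In this sketch the fix is a manual invalidation before the host reads PIM-modified data. Deciding who triggers that step, and how often, is one of the design questions the point above refers to.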

The Future and Current Implementations

The industry is already advancing with prototypes and first solutions, and the direction looks hard to reverse. Companies like Samsung, with its HBM-PIM memory, and giants like AMD and Intel, with architectures exploring related concepts, are leading this hybridization of memory and processing. This conceptual shift, which von Neumann probably did not anticipate, redefines the foundations of computing by prioritizing data proximity over transport speed. The future of hardware is likely to be more integrated and efficient. 💡