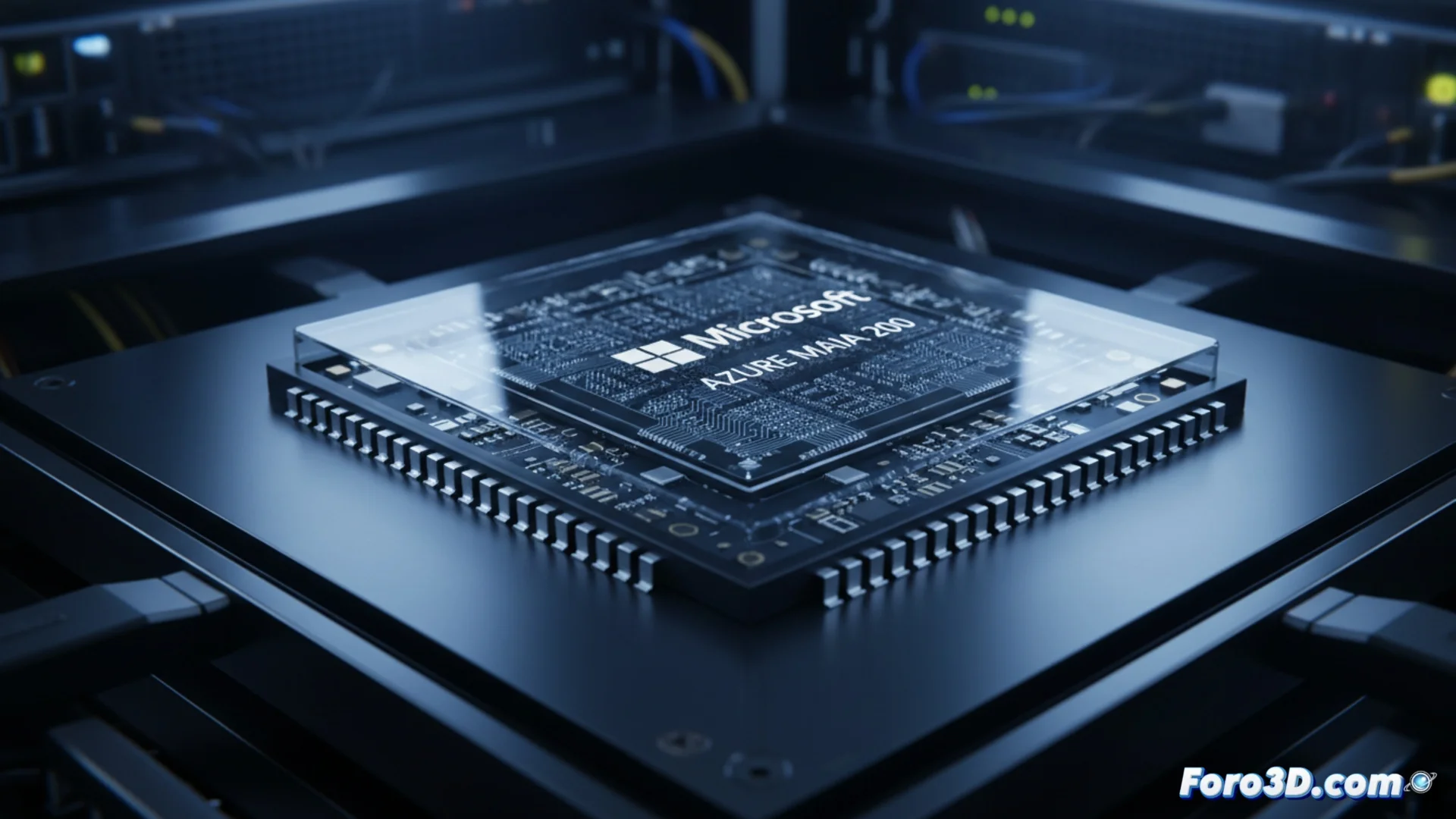

Microsoft Launches the Azure Maia 200 AI Accelerator

Microsoft has unveiled its new artificial intelligence accelerator, the Azure Maia 200. The chip is the next generation of the Maia processor family, succeeding the Maia 100, and is designed specifically for data-center servers. Its main task is inference: running already trained AI models to generate responses and predictions. Microsoft designed this hardware to perform these operations quickly and efficiently. According to the company, it does so at a level that surpasses what competitors such as Amazon and Google currently offer in this field. 🚀

Focus on Efficiency and Speed for Inference

The Azure Maia 200 is built to optimize how large-scale AI models run on the Azure cloud platform. By designing its own chip, Microsoft gains more direct control over the entire hardware and software stack, which allows finer tuning of how the system moves and processes data. The goal is to reduce latency and improve energy efficiency when running complex models, from large language models to image-analysis systems. The company has not disclosed every detail of the architecture. It does indicate that the design prioritizes memory capacity and chip-to-chip interconnect so that it can handle the enormous parameter counts of current models.

Key Features of the Azure Maia 200:

- Designed to execute AI inference tasks at high speed.

- Aims to improve energy efficiency in data centers.

- Architecture that prioritizes memory bandwidth and interconnection.

It seems the new global competition is fought not with missiles but with transistors, and over who can answer a query about kittens faster.

The Competitive Landscape of AI Silicon

The launch of the Maia 200 reflects a broad trend in the technology sector: major cloud providers are developing their own specialized chips. Amazon has its Inferentia and Trainium processors, while Google relies on its Tensor Processing Units (TPUs). With the Maia 200, Microsoft consolidates its strategy of offering distinct AI infrastructure. It aims to compete not only on software services but also on the performance of the underlying hardware. The company plans to deploy these accelerators in its data centers worldwide to power services such as Azure OpenAI Service and other generative AI workloads.

Main Players in Custom Cloud Chips:

- Microsoft: Azure Maia 200 for inference.

- Amazon Web Services: Inferentia and Trainium chips.

- Google Cloud: Tensor Processing Units (TPU).

Implications for the Future of Cloud Computing

This move underscores how the race for AI leadership now depends critically on custom silicon. By controlling both the software and the hardware, Microsoft aims to offer its customers a more integrated and efficient stack. The ultimate goal is to run larger and more complex artificial intelligence models faster and at lower operating cost, with the company aiming to set new performance standards in the cloud. 🔌