Adaptation of LiDAR Models through Visual Knowledge Distillation

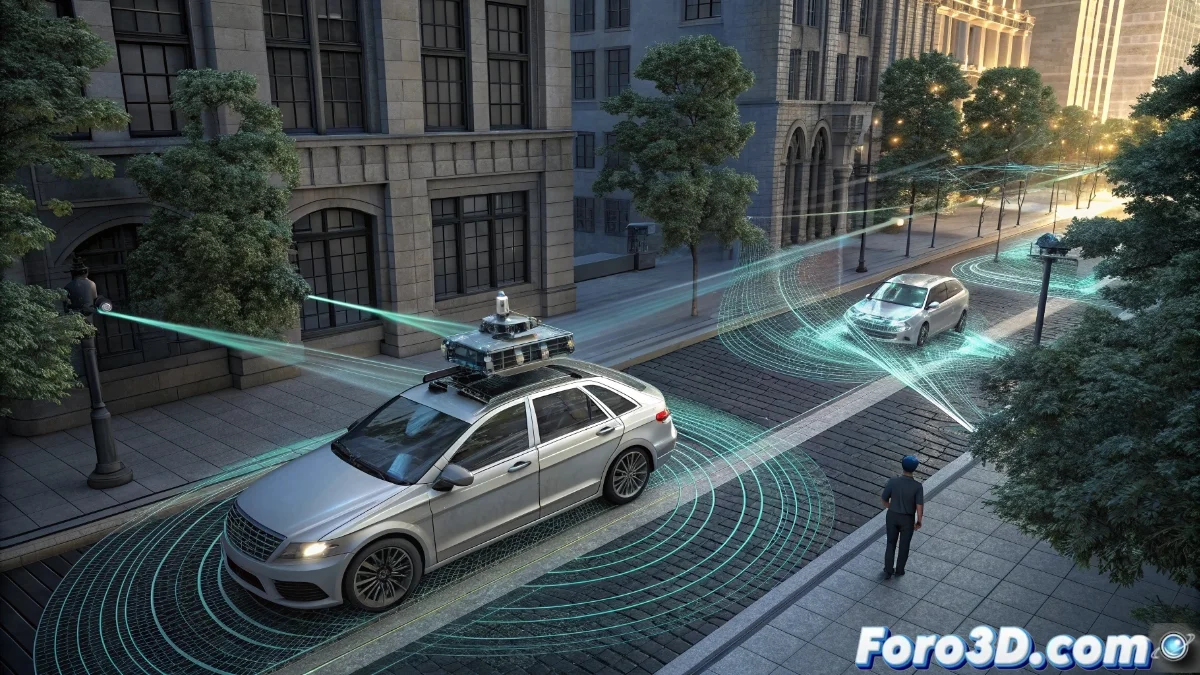

Interoperability between heterogeneous LiDAR sensors is one of the hardest obstacles to building robust autonomous systems. A semantic segmentation model trained on data from one sensor often degrades sharply when it faces a sensor with a different resolution, scanning pattern, or noise level 🎯.

Fundamentals of Multimodal Transfer

Vision foundation models offer a solution through unsupervised distillation, which transfers their visual representations to the LiDAR domain. The approach exploits the stability of image-model features to produce rich training signals that guide the LiDAR model, without requiring any manual annotation in the new sensor domain 🔄.

Key mechanisms of cross-modal distillation:

- The vision model acts as the teacher, producing representations that are invariant to sensor variations

- The LiDAR student learns to reproduce these representations during extensive pretraining on unlabeled data

- The result is a shared latent space that eases later adaptation between sensor configurations

Multimodal distillation bridges the visual and LiDAR domains, loosely mirroring the human ability to transfer knowledge from one sense to another.
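The teacher-student mechanism above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the method's actual implementation: LiDAR points are assumed to be already expressed in the camera frame, a hypothetical pinhole intrinsics tuple `(fx, fy, cx, cy)` projects each point onto the image, and the frozen teacher's feature at that pixel becomes the target for the student's per-point feature. The loss here is an assumed cosine distance; other objectives, such as contrastive losses, are also used for image-to-LiDAR distillation. All function names are hypothetical, and plain lists stand in for tensors.

```python
import math
from typing import List, Optional, Sequence, Tuple

Intrinsics = Tuple[float, float, float, float]  # hypothetical pinhole model: (fx, fy, cx, cy)
FeatureMap = List[List[List[float]]]            # teacher_map[v][u] -> feature vector at pixel (u, v)


def project_point(point: Sequence[float], intrinsics: Intrinsics,
                  width: int, height: int) -> Optional[Tuple[int, int]]:
    """Project a camera-frame 3D point (z forward) to integer pixel coords, or None if not visible."""
    x, y, z = point
    if z <= 0:  # behind the camera: no image feature to distill from
        return None
    fx, fy, cx, cy = intrinsics
    u = int(round(fx * x / z + cx))
    v = int(round(fy * y / z + cy))
    if 0 <= u < width and 0 <= v < height:
        return u, v
    return None


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(p * q for p, q in zip(a, b))
    norm = math.sqrt(sum(p * p for p in a)) * math.sqrt(sum(q * q for q in b))
    return dot / norm if norm > 0 else 0.0


def distillation_loss(points: Sequence[Sequence[float]],
                      student_feats: Sequence[Sequence[float]],
                      teacher_map: FeatureMap,
                      intrinsics: Intrinsics) -> float:
    """Mean (1 - cosine similarity) between student point features and teacher pixel features.

    Points that do not project into the image have no target and are skipped.
    """
    height, width = len(teacher_map), len(teacher_map[0])
    losses = []
    for point, feat in zip(points, student_feats):
        pixel = project_point(point, intrinsics, width, height)
        if pixel is None:
            continue
        u, v = pixel
        losses.append(1.0 - cosine_similarity(feat, teacher_map[v][u]))
    return sum(losses) / len(losses) if losses else 0.0


# Toy example: a 2x2 "image" of 2-D teacher features.
teacher_map = [[[1.0, 0.0], [0.0, 1.0]],
               [[1.0, 1.0], [0.0, -1.0]]]
intrinsics = (1.0, 1.0, 0.0, 0.0)
points = [(0.0, 0.0, 1.0), (1.0, 1.0, 1.0), (0.0, 0.0, -1.0)]  # last point is behind the camera
student_feats = [[1.0, 0.0], [0.0, 1.0], [5.0, 5.0]]
print(distillation_loss(points, student_feats, teacher_map, intrinsics))  # → 1.0
```

The first point matches its teacher feature exactly (loss 0), the second points the opposite way (loss 2), and the third is ignored, giving a mean of 1.0. In a real pipeline the teacher map would come from a frozen vision foundation model, and only the LiDAR student would receive gradients.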

Architecture for Scalable Generalization

The choice of LiDAR backbone largely determines how well the transfer works: some architectures absorb and retain the stable features of vision foundation models better than others. The proposed method pretrains the backbone only once, through distillation, and then reuses it across multiple domain-shift scenarios without repeating the full process 🏗️.

Strategies for preserving generalization:

- Keep the backbone frozen during the final adaptation to the new sensor

- Train only lightweight MLP heads for the specific segmentation task

- Extract robust features that withstand changes in point density and scanning pattern
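The adaptation recipe in the list above (one frozen backbone, lightweight heads trained per sensor) can be sketched as follows. The assumptions are deliberate simplifications: `FrozenBackbone` is a hypothetical stand-in for the distilled LiDAR backbone (a single fixed ReLU layer, not a real point-cloud network), and the "MLP head" is reduced to its simplest form, one linear softmax layer trained with plain SGD on cross-entropy. The point of the sketch is the data flow: features come from the backbone, gradients only touch the head, and the same backbone serves two sensors.

```python
import math
import random
from typing import List, Sequence

Matrix = List[List[float]]


def matvec(w: Matrix, x: Sequence[float]) -> List[float]:
    """Matrix-vector product on plain lists."""
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


class FrozenBackbone:
    """Hypothetical stand-in for the distilled LiDAR backbone: fixed weights, never updated."""

    def __init__(self, weights: Matrix) -> None:
        self.weights = [list(row) for row in weights]

    def __call__(self, x: Sequence[float]) -> List[float]:
        return [max(0.0, h) for h in matvec(self.weights, x)]  # one ReLU layer


class LinearHead:
    """Simplest possible head: one linear layer + softmax, trained with SGD on cross-entropy."""

    def __init__(self, in_dim: int, num_classes: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.w = [[rng.uniform(-0.01, 0.01) for _ in range(in_dim)] for _ in range(num_classes)]
        self.b = [0.0] * num_classes

    def logits(self, feat: Sequence[float]) -> List[float]:
        return [z + b for z, b in zip(matvec(self.w, feat), self.b)]

    def predict(self, feat: Sequence[float]) -> int:
        scores = self.logits(feat)
        return scores.index(max(scores))

    def fit(self, feats: List[List[float]], labels: List[int],
            lr: float = 0.5, epochs: int = 200) -> None:
        for _ in range(epochs):
            for feat, y in zip(feats, labels):
                probs = softmax(self.logits(feat))
                for c in range(len(self.w)):
                    g = probs[c] - (1.0 if c == y else 0.0)  # d(cross-entropy)/d(logit_c)
                    for j, f in enumerate(feat):
                        self.w[c][j] -= lr * g * f
                    self.b[c] -= lr * g


# One backbone, pretrained once, shared by per-sensor heads (toy data).
backbone = FrozenBackbone([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
snapshot = [row[:] for row in backbone.weights]

sensor_a_inputs, sensor_a_labels = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]], [0, 0, 1, 1]
sensor_b_inputs, sensor_b_labels = [[0.5, 0.0], [0.0, 0.5]], [1, 0]

heads = {}
for name, inputs, labels in [("sensor_a", sensor_a_inputs, sensor_a_labels),
                             ("sensor_b", sensor_b_inputs, sensor_b_labels)]:
    feats = [backbone(x) for x in inputs]  # features from the frozen backbone
    head = LinearHead(in_dim=3, num_classes=2)
    head.fit(feats, labels)                # only this head's w and b are updated
    heads[name] = head
```

Because the backbone only ever produces features and never receives updates, adding a third sensor means training one more small head rather than repeating the distillation pretraining.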

Experimental Validation and Practical Applications

The approach consistently outperformed conventional methods in four particularly challenging benchmark scenarios, including transfers between LiDARs with different densities and scanning configurations. In a practical case such as moving autonomous vehicles from 64-beam rotating sensors to 32-beam ones, the pretrained backbone extracts features that remain robust to the lower point density, while the MLP head quickly learns to map those features to the target semantic classes 🚗.
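To probe the 64-to-32-beam scenario before collecting new data, one simple option (our assumption, not a step the article describes) is to emulate the sparser sensor by dropping every other laser ring from 64-beam scans. The sketch assumes each point carries a `ring` index from the sensor driver; `LidarPoint` and `downsample_beams` are hypothetical names. Real 32-beam sensors also differ in vertical field of view and beam spacing, so this is only a rough proxy for the density reduction.

```python
from typing import List, NamedTuple


class LidarPoint(NamedTuple):
    x: float
    y: float
    z: float
    ring: int  # beam index reported by the sensor driver (assumed available)


def downsample_beams(points: List[LidarPoint], src_beams: int = 64,
                     dst_beams: int = 32) -> List[LidarPoint]:
    """Roughly emulate a sparser sensor by keeping every k-th beam, k = src_beams // dst_beams."""
    if dst_beams <= 0 or src_beams % dst_beams != 0:
        raise ValueError("src_beams must be a positive multiple of dst_beams")
    step = src_beams // dst_beams
    return [p for p in points if p.ring % step == 0]


# Synthetic 64-beam scan with 10 points per ring.
scan = [LidarPoint(float(i), 0.0, float(r), r) for r in range(64) for i in range(10)]
sparse = downsample_beams(scan)
print(len(scan), len(sparse))  # → 640 320
```

Feeding such downsampled scans through the frozen backbone gives a cheap first check of how much its features degrade as density drops.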

Together, image-to-LiDAR distillation, a reusable backbone, and lightweight adaptation heads form an efficient and scalable way to tackle generalization in robotic perception. The conceptual shift is significant: LiDAR systems are finally learning that a change of sensor does not mean relearning from scratch, only adapting intelligently to new operating conditions 💡.