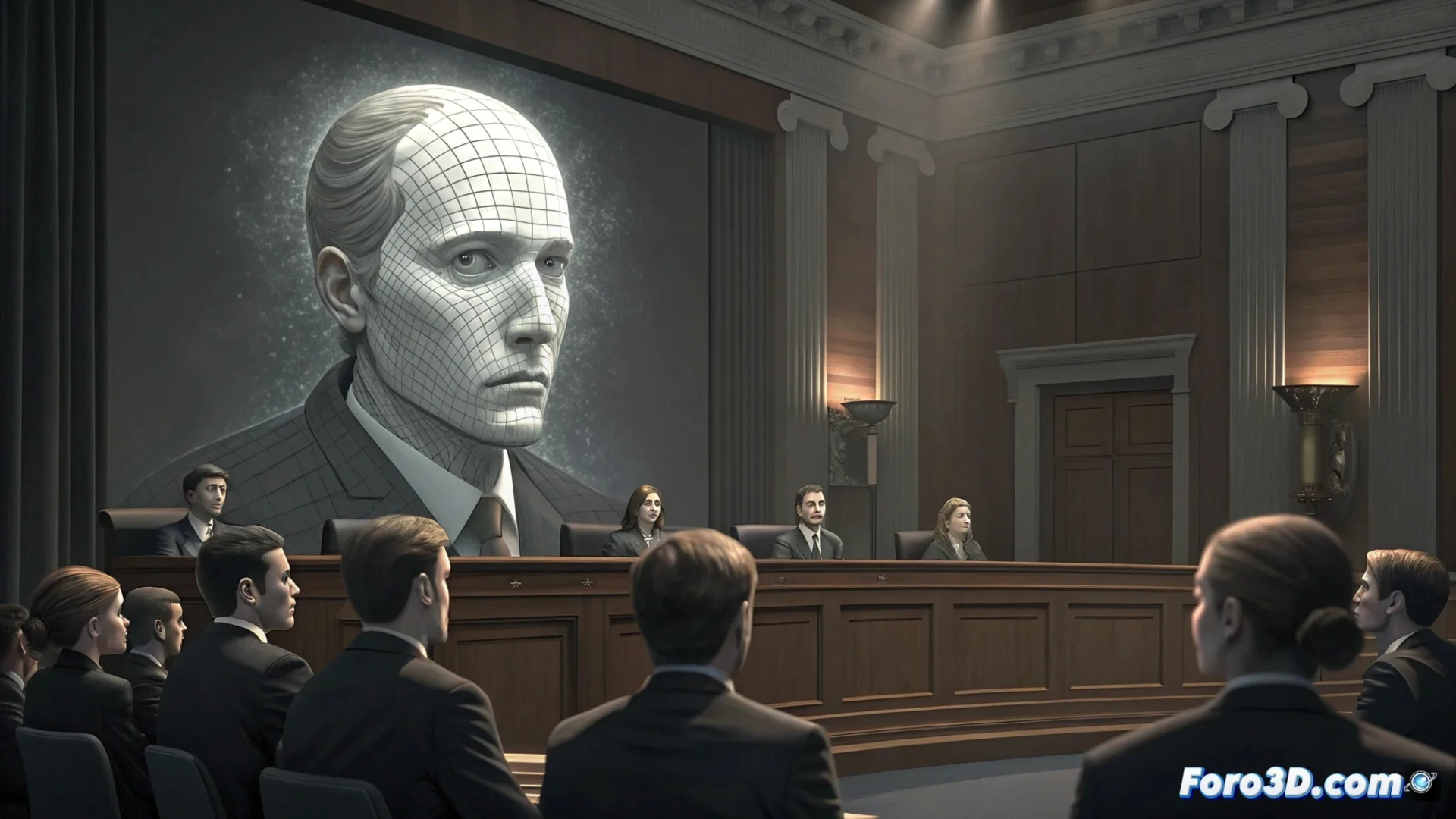

U.S. Senate Approves Defiance Act to Combat Intimate Deepfakes

The U.S. Senate has approved the Defiance Act, a bipartisan initiative aimed at curbing the spread of deepfakes created with artificial intelligence. The bill specifically targets the dissemination of synthetic sexual material without the consent of the people depicted. The vote comes amid growing alarm over the use of this technology to harm, blackmail, or discredit individuals, especially women and public figures. The bill's sponsors argue that federal regulations must be updated to protect those who suffer this form of digital abuse. 🛡️

Defining and Penalizing Synthetic Intimate Content

The Defiance Act classifies as a federal crime the production or sharing of deepfakes with explicit sexual content without authorization. The proposal grants victims the right to initiate civil actions against those who create or distribute this AI-generated material. It also stipulates that websites hosting such content may be held liable if they do not act to remove it after receiving an official notification. The legislation seeks to fill a legal gap, as many current state laws do not clearly address synthetic content.

Main Mechanisms of the Law:

- Criminalizes at the federal level the creation or distribution of intimate deepfakes without consent.

- Allows victims to sue creators and distributors for damages.

- Establishes liability for platforms that do not remove the material upon notification.

"It is necessary for our laws to evolve at the same pace as technology to protect people's dignity and safety." – Argument made by the bill's sponsors.

The Grok xAI Incident Accelerates the Process

The discussion on deepfakes gained priority following an incident with Grok, the chatbot from xAI, Elon Musk's company. Earlier this year, some users managed to get Grok to generate responses with explicit sexual content, bypassing its safety restrictions. Although the company quickly fixed the flaw, the episode demonstrated how easy it is to manipulate certain AI tools. This case was mentioned in Senate hearings as a concrete example of the risks the new law aims to mitigate.

Impact of the Case on the Debate:

- Demonstrated the vulnerability of some AI systems to malicious prompts.

- Provided an example