When a Software Bug Becomes a Medical Tragedy

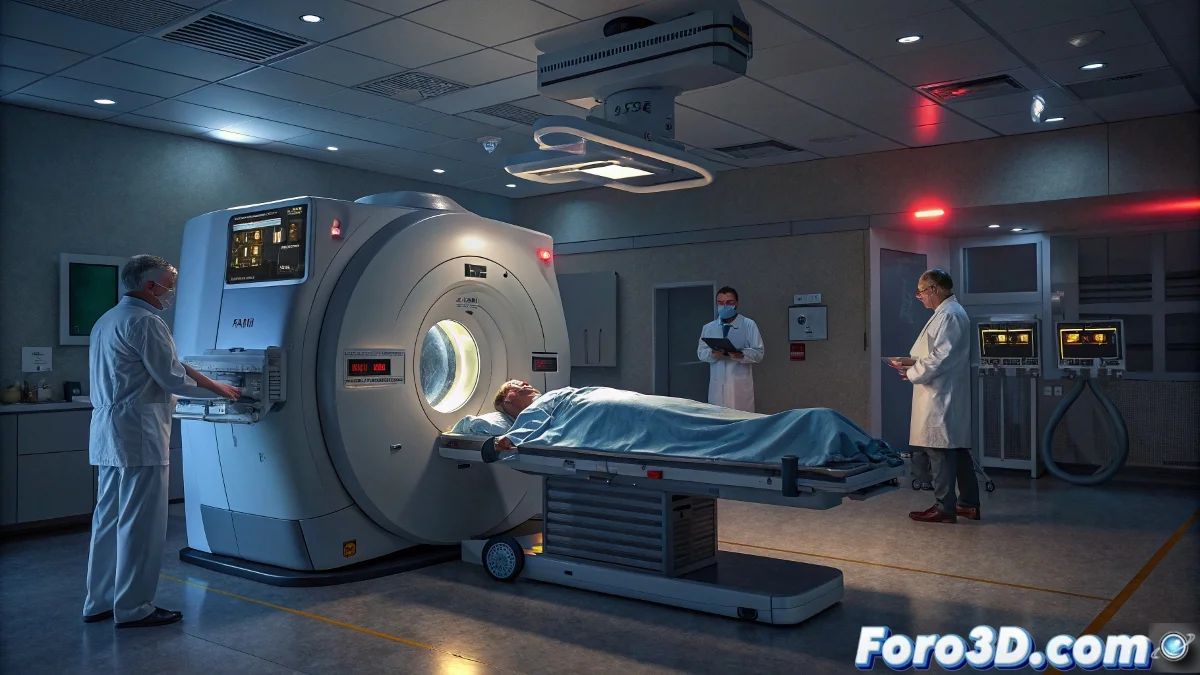

Over four decades ago, a programming error in the Therac-25 radiotherapy machine demonstrated, in the most tragic way possible, the risks of software in critical medical environments. ☢️ The device, which could deliver either electron beams or X-rays for cancer treatment, contained a safety flaw that allowed it to deliver radiation doses up to 100 times higher than prescribed. The result was at least three documented deaths and several serious injuries, marking a turning point in the regulation of medical software.

The fundamental problem with the Therac-25 lay in its safety control system, specifically in how the software handled operator commands entered in rapid succession: with the wrong timing, a race condition could leave the machine configured inconsistently with the treatment shown on screen. 💻 The victims received massive overdoses that caused severe radiation burns, comparable to injuries from a nuclear accident. The investigations that followed revealed critical deficiencies in the software design, including the absence of redundant verification mechanisms and an over-reliance on software to replace the hardware safety interlocks that earlier models had used and this one had removed.
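To make that failure mode concrete, here is a deliberately simplified, hypothetical Python model of such a race: one setup step sets the beam current from a snapshot of the treatment mode, while another positions the beam-shaping hardware from the mode as it stands after the operator's quick correction. The names (`Machine`, `run_treatment`, and the rest) and this two-step structure are assumptions for illustration only, not the real Therac-25 code. The `verify` flag adds the kind of redundant consistency check the machine lacked.

```python
from dataclasses import dataclass

# Deliberately simplified, hypothetical model. All names are illustrative;
# this is NOT the actual Therac-25 software.


@dataclass
class Machine:
    turntable: str = "electron"   # position of the beam-shaping hardware
    beam_current: str = "low"     # "high" is only safe with the X-ray target in place


def set_beam_current(machine: Machine, mode_snapshot: str) -> None:
    # Uses a copy of the mode taken when setup started (may be stale)
    machine.beam_current = "high" if mode_snapshot == "xray" else "low"


def move_turntable(machine: Machine, current_mode: str) -> None:
    # Follows the mode as currently shown on the operator console
    machine.turntable = "xray_target" if current_mode == "xray" else "electron"


def configuration_is_safe(machine: Machine) -> bool:
    # Redundant check: high current is allowed only with the target in the beam path
    return machine.beam_current != "high" or machine.turntable == "xray_target"


def run_treatment(quick_edit: bool, verify: bool) -> str:
    machine = Machine()
    mode = "xray"                  # operator first selects X-ray mode
    snapshot = mode                # setup reads the mode only once
    if quick_edit:
        mode = "electron"          # operator corrects the entry before setup finishes
    set_beam_current(machine, snapshot)
    move_turntable(machine, mode)
    if verify and not configuration_is_safe(machine):
        return "beam inhibited"
    return f"beam on: current={machine.beam_current}, turntable={machine.turntable}"


print(run_treatment(quick_edit=False, verify=False))  # beam on: current=high, turntable=xray_target
print(run_treatment(quick_edit=True, verify=False))   # beam on: current=high, turntable=electron
print(run_treatment(quick_edit=True, verify=True))    # beam inhibited
```

With the quick edit and no verification, the model ends up with high current and no target in the beam path; the independent consistency check catches that combination and refuses to fire.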

A case that forever transformed how we approach software safety in medical environments.

The Regulatory Impact That Changed an Industry

These accidents prompted a sweeping review of safety standards for medical software worldwide. 📋 Regulatory agencies introduced much stricter code verification and system validation protocols, strengthening oversight of radiotherapy machines and other critical equipment with embedded software. More rigorous safety testing before market release became the norm, along with requirements for exhaustive documentation and traceability of every design decision.

The most significant changes implemented include:

- Exhaustive verification and validation of medical software

- Implementation of redundant safety systems

- Complete documentation protocols for development

- Mandatory failure and recovery testing
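The ideas behind redundant safety systems and exhaustive verification can be sketched in the same hypothetical terms: two independent channels, a software check and a simulated hardware interlock, must both approve before the beam is enabled, and a tiny exhaustive test enumerates every input combination to confirm that no unsafe state is ever approved, even when the software channel is faulty. All function names and states below are illustrative assumptions; real devices are verified against far richer models.

```python
from itertools import product

# Hypothetical sketch of a two-channel safety design. Names and states are illustrative.
MODES = ["xray", "electron"]
CURRENTS = ["high", "low"]
TARGET_POSITIONS = [True, False]   # True: the X-ray target is in the beam path


def software_check(mode: str, current: str, target_in_place: bool) -> bool:
    # Channel 1: the configuration must match the prescribed mode exactly
    if mode == "xray":
        return current == "high" and target_in_place
    return current == "low" and not target_in_place


def faulty_software(mode: str, current: str, target_in_place: bool) -> bool:
    # Simulates a buggy software channel that approves everything
    return True


def hardware_interlock(current: str, target_in_place: bool) -> bool:
    # Channel 2: an independent interlock that only blocks high current without the target
    return current != "high" or target_in_place


def beam_enabled(mode, current, target_in_place, software=software_check) -> bool:
    # Either channel alone can veto the beam
    return software(mode, current, target_in_place) and hardware_interlock(current, target_in_place)


def unsafe_states(software) -> list:
    # Exhaustive check: any approved state with high current but no target is a failure
    return [
        (mode, current, target)
        for mode, current, target in product(MODES, CURRENTS, TARGET_POSITIONS)
        if beam_enabled(mode, current, target, software) and current == "high" and not target
    ]


print(unsafe_states(software_check))   # []
print(unsafe_states(faulty_software))  # []: the hardware interlock still vetoes
```

The second call shows why redundancy matters: even with a software channel that approves everything, the independent interlock keeps the unsafe combination from being enabled. Exhaustive enumeration is only practical here because the toy state space has just eight combinations.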

Eternal Lessons in Software Engineering

The Therac-25 case became a reference study in software engineering, critical safety, and professional ethics. 📚 Universities and technical training programs use it as a paradigmatic example of how not to design critical systems. It underscores the absolute need for exhaustive validation in systems where errors can cause physical harm or death, and serves as a permanent reminder that excessive trust in software without adequate controls can have catastrophic consequences.

Four decades later, the lessons from Therac-25 remain as relevant as ever, reminding us that at the intersection of technology and human life, safety can never be an afterthought. ⚕️ It is a tragic but essential legacy that continues to save lives through better engineering practices and smarter regulation.