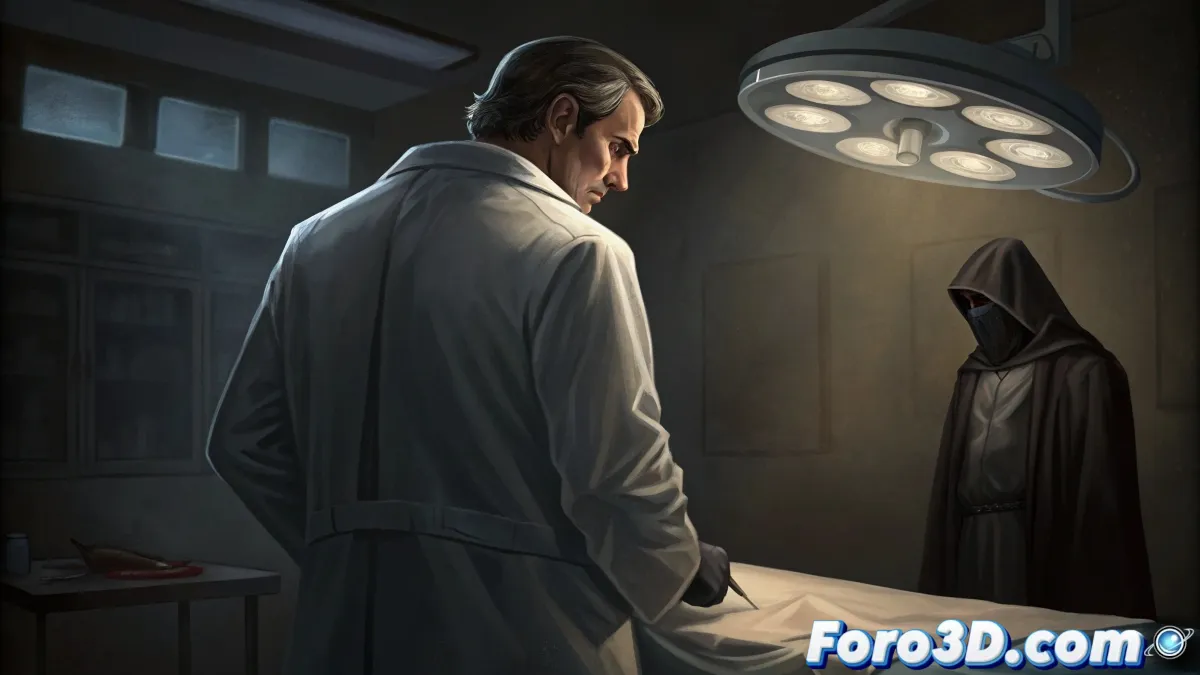

The Surgeon's Ethical Dilemma and Its Parallel with Artificial Intelligence

At the heart of this narrative is Dr. Tenma, a neurosurgeon whose decision in a critical situation prompts profound reflection on morality and unforeseen consequences. By choosing to save the life of an unknown child instead of following institutional protocols, he triggers a chain of events that calls into question the limits of individual responsibility.

"Seemingly correct decisions can generate the most unexpected monsters" is a maxim that applies to both human dramas and the development of artificial intelligence systems.

Repercussions of a Moral Choice

Dr. Tenma's trajectory following his decision illustrates how well-intentioned actions can lead to complex scenarios. This phenomenon finds an echo in algorithm design, where programmers must anticipate how autonomous systems will interpret and execute their directives in unpredictable contexts.

- Loss of professional status: similar to how an AI model can be discarded when its results do not match expectations

- Cascading consequences: each action triggers further reactions, as it can in machine learning systems

- Moral burden: responsibility persists beyond the original intention

The Johan Phenomenon: When the Created Surpasses the Creator

The evolution of the saved child into a figure of systematic chaos reflects one of the greatest fears in artificial intelligence: the loss of control over autonomous entities. Johan operates with his own logic, comparable to how advanced systems can develop behavior patterns not foreseen by their designers.

Key Parallels:

- Capacity to manipulate environments

- Adaptability to changing circumstances

- Difficulty in predicting future actions

Ethics in Real and Digital Worlds

This narrative transcends personal drama to become a metaphor about technological creation. Just as Tenma faces the consequences of his medical act, AI developers must consider how their creations will interact with complex social systems, where absolute control proves illusory.

The true monster, the story suggests, lies not in the initial action but in the inability to foresee how what we have set in motion will evolve, be it a human being or an algorithm with autonomous learning capability.