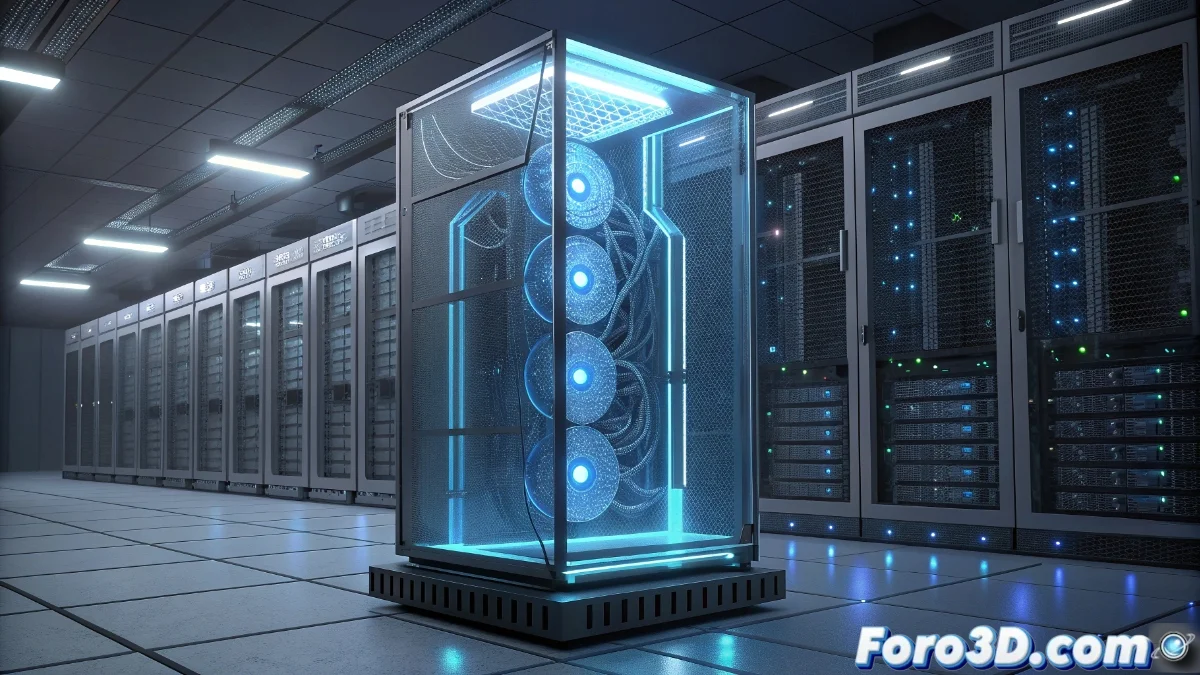

The NVIDIA DGX A100 Server: Comprehensive Power for Artificial Intelligence

The NVIDIA DGX A100 is an integrated server designed for large-scale training and deployment of artificial intelligence systems. It combines eight A100 graphics processing units connected via NVLink into a single, tightly coupled parallel computing platform. 🚀

Advanced Computing Architecture

The NVLink interconnect, routed through NVSwitch, lets each of the eight A100 GPUs access the memory of the others at far higher bandwidth than a PCIe link provides. This does not remove the cost of moving data between GPUs, but it reduces it enough that models too large for a single GPU can be split across the system and trained in significantly less time.
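As a rough illustration of why pooled GPU memory matters, the sketch below estimates how many GPUs are needed just to hold a model's training state. The 40 GB per-GPU figure and the 16 bytes per parameter (a common rule of thumb for mixed-precision training with Adam) are assumptions for illustration, not values taken from this article, and activation memory is ignored.

```python
import math

GPU_COUNT = 8         # from the article: eight A100 GPUs
GPU_MEMORY_GB = 40    # assumption: 40 GB A100 variant
BYTES_PER_PARAM = 16  # assumption: fp16 weights and gradients,
                      # fp32 master weights, two fp32 Adam moments


def training_state_gb(num_params: int) -> float:
    """Approximate memory for weights, gradients and optimizer state."""
    return num_params * BYTES_PER_PARAM / 1e9


def min_gpus_needed(num_params: int) -> int:
    """Smallest number of GPUs whose combined memory holds that state."""
    return math.ceil(training_state_gb(num_params) / GPU_MEMORY_GB)


for params in (1_000_000_000, 10_000_000_000, 30_000_000_000):
    needed = min_gpus_needed(params)
    verdict = "fits" if needed <= GPU_COUNT else "does not fit"
    print(
        f"{params / 1e9:.0f}B parameters: "
        f"{training_state_gb(params):.0f} GB, "
        f"{needed} GPU(s) needed, {verdict} in one system"
    )
```

Under these assumptions a 10-billion-parameter model already needs several GPUs for its training state alone, which is the situation where fast GPU-to-GPU links pay off.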

Key system components:

- Eight NVIDIA A100 GPUs with high-speed NVLink interconnection

- Two high-performance AMD EPYC processors for host-side management and data preparation

- 1 Terabyte of RAM for handling massive datasets

- NVMe storage for ultra-fast transfers

- Advanced cooling system for maximum energy efficiency

- Native optimization for TensorFlow, PyTorch, and other frameworks

Because work is distributed across eight GPUs, the system can handle datasets of multiple terabytes, and its cooling design keeps the hardware running efficiently under sustained load.
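The idea of spreading a large dataset across the GPUs can be sketched as simple index sharding, where each GPU processes a disjoint slice of the samples. The helper name `shard_indices` is hypothetical and the strided split is only one possible scheme; in practice a framework utility such as PyTorch's `DistributedSampler` would normally do this.

```python
def shard_indices(num_samples: int, num_gpus: int = 8) -> list[range]:
    """Split sample indices into strided shards, one per GPU.

    GPU `rank` receives samples rank, rank + num_gpus, rank + 2 * num_gpus, ...
    so shard sizes differ by at most one sample.
    """
    return [range(rank, num_samples, num_gpus) for rank in range(num_gpus)]


# Toy example: 20 samples spread over the eight GPUs.
shards = shard_indices(20)
for rank, shard in enumerate(shards):
    print(f"GPU {rank}: {list(shard)}")
```

Each GPU then loads only its own shard, so no single device ever needs to hold or read the full dataset.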

Applications in Professional Environments

This system targets advanced research projects whose model size or data volume exceeds what conventional infrastructure can handle. Universities and technology corporations use the DGX A100 to develop natural language systems, computer vision for autonomous vehicles, and complex scientific simulations.

Main use cases:

- Development of industrial-scale natural language models

- Computer vision systems for autonomous cars

- Scientific simulations and advanced research

- Execution of multiple workloads through virtualization

- Reduction of latency in real-time inference processes

- Improvement of throughput in massive batch processing

Practical Implementation Considerations

GPU partitioning, through the A100's Multi-Instance GPU (MIG) feature, allows multiple workloads to run simultaneously on isolated slices of each GPU, making the platform versatile enough for both development and production environments. Keep in mind that when all GPUs operate at maximum capacity the system can draw up to roughly 6.5 kW, which calls for data-center-grade power delivery and cooling rather than a standard office circuit. ⚡
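For capacity planning, the two points above reduce to simple arithmetic. The sketch below assumes a limit of seven MIG instances per A100 and a peak system draw of about 6.5 kW, both taken from NVIDIA's published specifications rather than from this article, and it scales power linearly with utilization, which is a deliberate simplification.

```python
GPU_COUNT = 8              # from the article
MAX_MIG_PER_GPU = 7        # assumption: A100 MIG upper limit
MAX_SYSTEM_POWER_KW = 6.5  # assumption: approximate peak system draw


def max_mig_instances(gpus: int = GPU_COUNT) -> int:
    """Upper bound on isolated GPU instances across the whole system."""
    return gpus * MAX_MIG_PER_GPU


def energy_kwh(hours: float, utilization: float = 1.0) -> float:
    """Rough energy use, scaling peak power linearly with utilization."""
    return MAX_SYSTEM_POWER_KW * utilization * hours


print(f"Up to {max_mig_instances()} MIG instances per system")
print(f"One day at full load: about {energy_kwh(24):.0f} kWh")
```

Real power draw depends on the workload and does not scale linearly, so treat the energy figure as an upper-bound estimate for sizing circuits and cooling.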