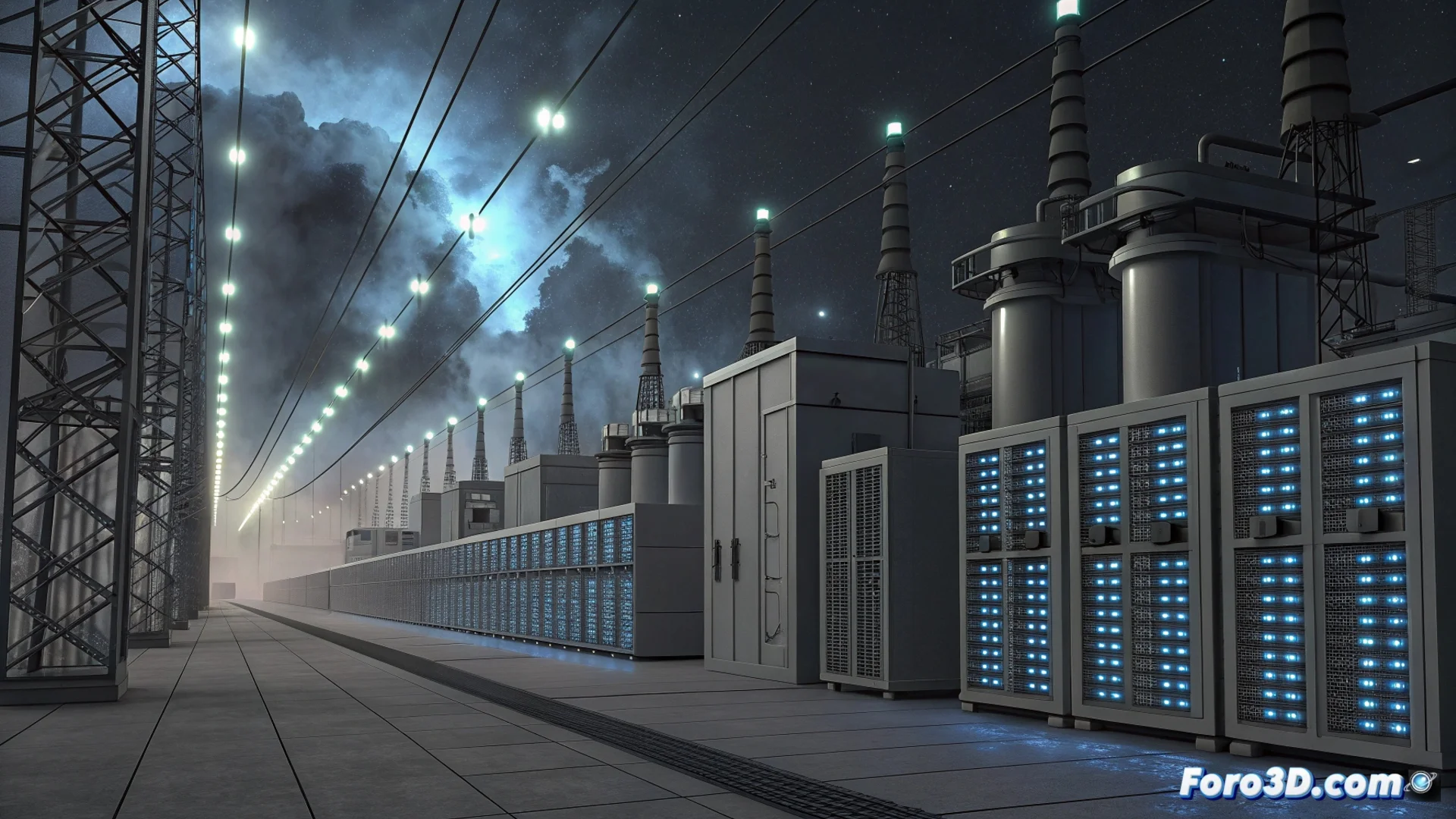

The Energy Demand of AI Data Centers Continues to Grow

The race to build specialized infrastructure for artificial intelligence continues unabated. This constant expansion puts ever-increasing pressure on electricity grids, a phenomenon particularly evident in the United States. A recent analysis quantifies this impact with concrete figures, projecting a future in which consumption multiplies. ⚡

Concrete Projections on Future Consumption

A BloombergNEF report published in December offers one of the most detailed estimates to date. The study projects that the power demand of these data centers could reach 106 gigawatts by 2035. This figure represents a very significant increase over current capacity and underscores how energy-intensive it is to train and run advanced AI models. It is roughly the power needed to supply tens of millions of homes.
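To see where a "tens of millions of homes" comparison can come from, here is a rough back-of-the-envelope sketch. The 106 gigawatt figure is the projection cited above; the household consumption value (about 10,500 kWh per year for an average US home) is an assumption added for illustration and is not taken from the report. The function names are likewise hypothetical.

```python
# Back-of-the-envelope check: how many average homes does 106 GW correspond to?
# This compares continuous demand with the *average* load of a home, so it is
# only an order-of-magnitude illustration, not a grid-planning figure.

PROJECTED_DEMAND_GW = 106  # projection cited in the article

# ASSUMPTION (not from the report): an average US home uses ~10,500 kWh/year.
ASSUMED_KWH_PER_HOME_PER_YEAR = 10_500
HOURS_PER_YEAR = 8_760


def average_home_load_kw(kwh_per_year: float) -> float:
    """Convert yearly consumption (kWh) into an average continuous load (kW)."""
    return kwh_per_year / HOURS_PER_YEAR


def homes_equivalent(demand_gw: float, kwh_per_home_per_year: float) -> float:
    """Number of average homes whose combined average load equals demand_gw."""
    demand_kw = demand_gw * 1_000_000  # 1 GW = 1,000,000 kW
    return demand_kw / average_home_load_kw(kwh_per_home_per_year)


homes = homes_equivalent(PROJECTED_DEMAND_GW, ASSUMED_KWH_PER_HOME_PER_YEAR)
print(f"About {homes / 1e6:.0f} million homes")
```

Under these assumptions the result is on the order of 88 million homes, which is consistent with the "tens of millions" comparison. A different household consumption figure would shift the number accordingly.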

Key Factors Driving This Consumption:

- The race to develop and train large language models and other complex AI applications.

- The need to process massive amounts of data continuously.

- The critical need to cool high-performance servers, which adds further to total consumption.

The exponential growth of AI infrastructure is directly linked to a parallel increase in electricity demand.

Strategies for Sustainable Development

This scenario forces the technology industry and regulators to seek solutions that balance innovation with sustainability. Various avenues are being actively explored to mitigate the impact and avoid overloading the grid.

Solutions in Development:

- Improve the efficiency of chips and specialized AI processors.

- Implement more advanced and lower-consumption cooling systems.

- Integrate renewable energy sources, such as solar or wind, directly into operations.

A Recursive Future for Energy

Some companies are already planning to build data centers near solar or wind farms to ensure a greener supply. While some express concern about the environmental impact, others envision a future where artificial intelligence itself could optimize its energy consumption, creating a recursive cycle where AI manages the energy that AI needs. The ultimate goal is clear: sustain the pace of innovation without compromising the stability of electrical grids. 🔄