Sound Classes in Unreal Engine: Hierarchical Audio Management

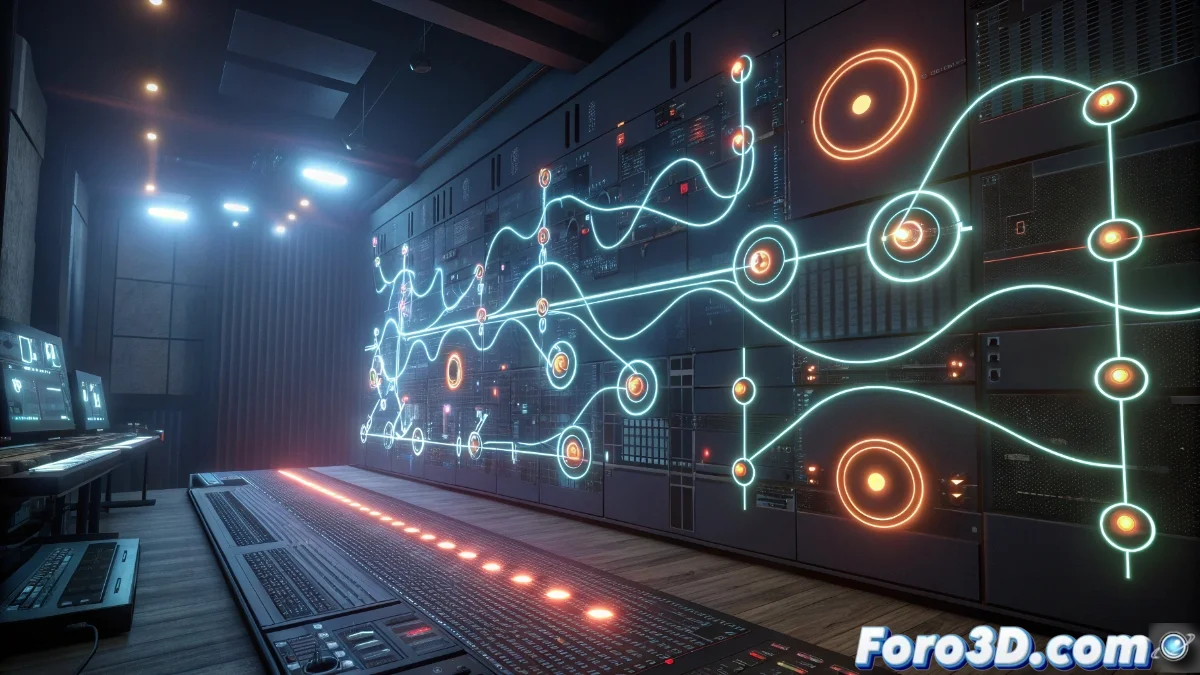

Unreal Engine's Sound Class system manages audio hierarchically at runtime. Sounds are grouped into classes that share attributes, so global settings such as volume or pitch can be changed for a whole group without editing each sound asset individually. 🎵

Configuration and Structure of Sound Classes

Configuration begins with creating Sound Class assets in the Content Browser and setting their properties, such as volume, pitch, and attenuation distance scale. The classes are arranged in a parent-child hierarchy, and children inherit settings from the levels above them, which keeps control both detailed and consistent.

Key elements in the hierarchy:

- Main Master class that acts as the root of the audio tree

- Specialized subclasses like Music, SFX, or Dialogue with category-specific settings

- Inherited properties that ensure uniformity in the project's auditory experience
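The parent-child inheritance described above can be modeled in a few lines of standalone C++. This is a minimal sketch, not engine code: `SoundClassNode`, `effectiveVolume`, and `effectivePitch` are hypothetical names, and it assumes that a class's final volume and pitch are the product of its own multipliers and those of every ancestor up to the root.

```cpp
#include <cassert>
#include <string>
#include <utility>

// Simplified stand-in for a Sound Class; this is NOT the engine's USoundClass.
struct SoundClassNode {
    std::string name;
    float volume = 1.0f;                     // volume multiplier set on this class
    float pitch = 1.0f;                      // pitch multiplier set on this class
    const SoundClassNode* parent = nullptr;  // nullptr marks the root (e.g. Master)

    SoundClassNode(std::string n, float v, float p,
                   const SoundClassNode* par = nullptr)
        : name(std::move(n)), volume(v), pitch(p), parent(par) {
        assert(v >= 0.0f && p > 0.0f);  // reject negative volume and non-positive pitch
    }
};

// Walk from a class up to the root, multiplying volume along the way.
inline float effectiveVolume(const SoundClassNode& node) {
    float result = 1.0f;
    for (const SoundClassNode* c = &node; c != nullptr; c = c->parent) {
        result *= c->volume;
    }
    return result;
}

// Same walk for pitch.
inline float effectivePitch(const SoundClassNode& node) {
    float result = 1.0f;
    for (const SoundClassNode* c = &node; c != nullptr; c = c->parent) {
        result *= c->pitch;
    }
    return result;
}
```

With a Master class at volume 0.5 and an SFX child at 0.5, `effectiveVolume` for SFX is 0.25. Setting the Master volume to 0 silences every class beneath it, which is the centralized control the hierarchy is meant to provide.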

Organizing sounds into Sound Classes greatly simplifies mixing and mastering, because a single control can affect many sound elements at once.

Practical Applications and Performance Optimization

In real-world scenarios, Sound Classes enable features such as selective muting, dynamic adjustments driven by gameplay events, and efficient resource management to avoid acoustic saturation. Developers can manipulate them from Blueprints or C++ at runtime, adapting the audio to what is happening in the game.
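A dynamic adjustment of this kind usually means moving a class's volume toward a new target over a short fade rather than changing it instantly. In the engine this is typically driven through Sound Mixes (for example `UGameplayStatics::SetSoundMixClassOverride`, which accepts a fade-in time). The standalone sketch below only illustrates the underlying idea; `ClassVolumeFade`, `fadeTo`, and `tick` are hypothetical names, and a linear fade is an assumption made for simplicity.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Hypothetical helper, not an engine type: one class volume fading toward a target.
struct ClassVolumeFade {
    float current = 1.0f;        // volume applied right now
    float target = 1.0f;         // volume the fade is heading toward
    float ratePerSecond = 0.0f;  // how fast current moves toward target

    // Request a new target, reached after fadeSeconds (0 snaps immediately).
    void fadeTo(float newTarget, float fadeSeconds) {
        assert(newTarget >= 0.0f && fadeSeconds >= 0.0f);
        target = newTarget;
        if (fadeSeconds == 0.0f) {
            current = target;
            ratePerSecond = 0.0f;
        } else {
            ratePerSecond = std::abs(target - current) / fadeSeconds;
        }
    }

    // Advance the fade by deltaSeconds; intended to be called once per frame.
    void tick(float deltaSeconds) {
        const float step = ratePerSecond * deltaSeconds;
        if (current < target) {
            current = std::min(current + step, target);  // fade up, clamp at target
        } else {
            current = std::max(current - step, target);  // fade down, clamp at target
        }
    }
};
```

A gameplay event such as opening a pause menu could call `fadeTo(0.0f, 2.0f)` on the music class, and each frame's `tick` would then lower the volume until it reaches zero.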

Optimization advantages:

- Active control of the number of sounds played simultaneously

- Reduction of CPU usage on platforms with limited resources

- Improvement of overall smoothness by managing priorities and attenuations
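Limiting the number of simultaneous sounds by priority can be illustrated with a small standalone sketch. It assumes a simple policy in which only the highest-priority sounds are kept when a voice budget is exceeded. `ActiveSound` and `selectAudible` are hypothetical names, and the engine's own voice management is more involved than this.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical record of a sound that wants to play this frame.
struct ActiveSound {
    std::string name;
    float priority;  // higher values are more important
};

// Keep at most maxVoices sounds, preferring higher priority.
// Sounds with equal priority keep their original relative order.
inline std::vector<ActiveSound> selectAudible(std::vector<ActiveSound> sounds,
                                              std::size_t maxVoices) {
    std::stable_sort(sounds.begin(), sounds.end(),
                     [](const ActiveSound& a, const ActiveSound& b) {
                         return a.priority > b.priority;
                     });
    if (sounds.size() > maxVoices) {
        // Drop everything past the voice budget.
        sounds.erase(sounds.begin() + static_cast<std::ptrdiff_t>(maxVoices),
                     sounds.end());
    }
    return sounds;
}
```

Given a footstep, a line of dialogue, an ambience loop, and an explosion with a budget of two voices, the dialogue and explosion survive if they carry the highest priorities, and the quieter background sounds are skipped instead of consuming CPU time.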

Final Reflections on Audio Management

Working with Sound Classes may leave you wishing real life came with the same volume controls. In Unreal Engine, at least, you can mute whatever you don't want to hear with a click. The system not only streamlines the workflow but also helps deliver a polished and adaptable auditory experience. 🎧