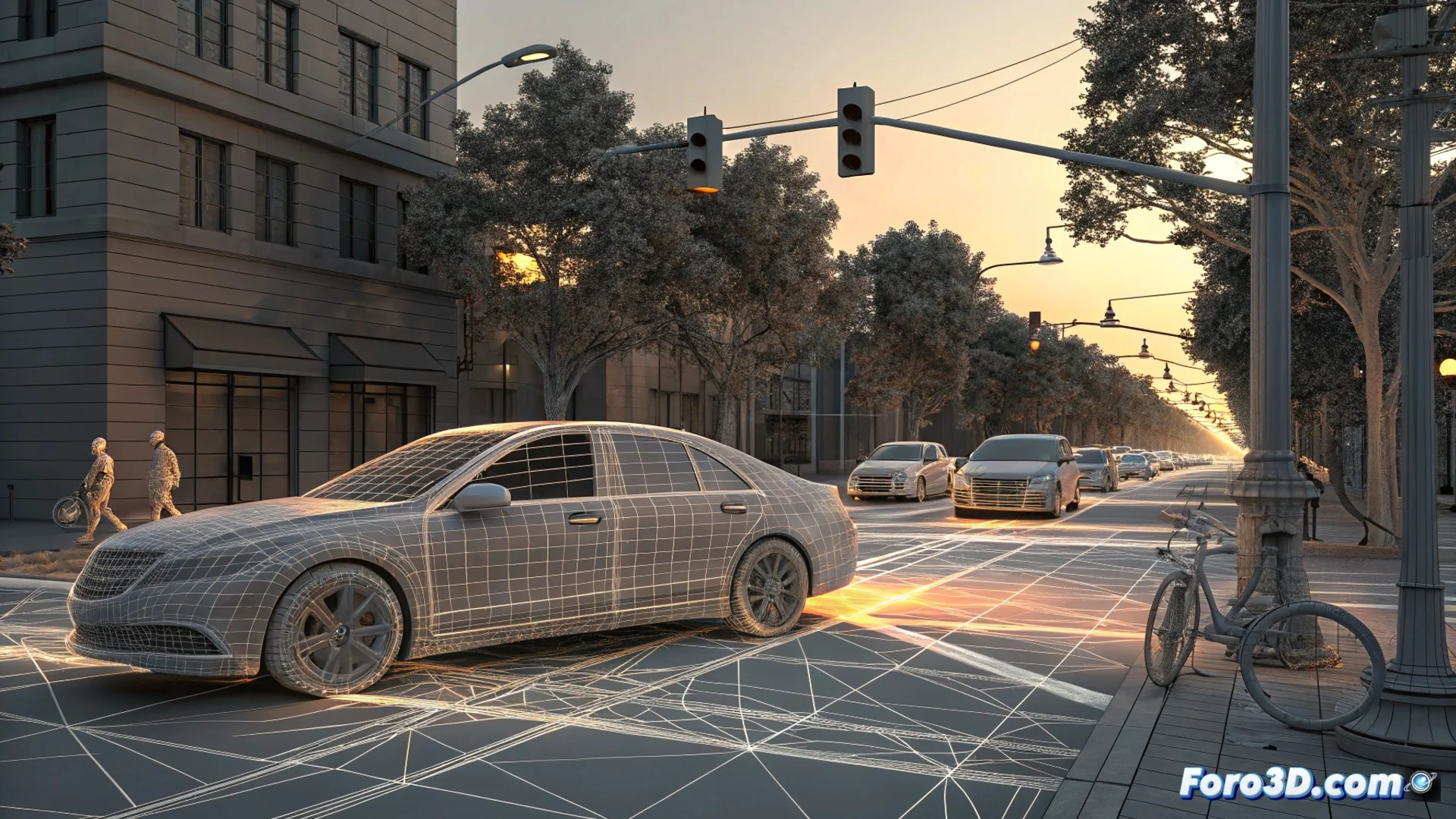

Reconstructing Accidents with Autonomous Vehicles Requires Modeling the Environment in 3D

When a self-driving vehicle is involved in an incident, investigators don't just review the recordings. Their core work is to recreate the physical scene digitally, as precisely as the recorded data allows. This three-dimensional forensic reconstruction is the cornerstone for understanding what really happened. 🕵️‍♂️

The LIDAR Point Cloud: The Digital Mold of the Real World

The process begins with the data captured by the vehicle's LIDAR sensors. These devices emit short laser pulses and measure how long each pulse takes to bounce back from a surface; because light travels at a known speed, that round-trip time gives the distance to the surface. Repeated across many beam directions, these measurements produce a large, detailed 3D point cloud of the surroundings. Each point is a precise coordinate, and together the points map everything from the asphalt and traffic signs to other cars and people. This data is the essential raw material; without it, any subsequent analysis lacks an objective foundation.
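The time-of-flight principle reduces to a one-line formula, d = c·t/2, because the pulse travels to the surface and back. The sketch below is a minimal illustration, not any sensor vendor's processing pipeline: it converts a round-trip time into a range, then places that range along the beam's direction to obtain an x, y, z point. The function names, the sensor-frame axis convention (x forward, y left, z up), and the assumption that each beam's azimuth and elevation are known exactly are illustrative assumptions; real pipelines also apply calibration and motion compensation.

```python
import math
from dataclasses import dataclass

# Speed of light in vacuum, exact by SI definition.
SPEED_OF_LIGHT_M_S = 299_792_458.0


@dataclass(frozen=True)
class Point3D:
    """One LIDAR return in the (assumed) sensor frame: x forward, y left, z up."""
    x: float
    y: float
    z: float


def range_from_time_of_flight(round_trip_s: float) -> float:
    """Distance to the reflecting surface; halved because the pulse goes out and back."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def to_cartesian(range_m: float, azimuth_rad: float, elevation_rad: float) -> Point3D:
    """Place a range measured along a known beam direction into sensor-frame x, y, z."""
    horizontal = range_m * math.cos(elevation_rad)  # projection onto the ground plane
    return Point3D(
        x=horizontal * math.cos(azimuth_rad),
        y=horizontal * math.sin(azimuth_rad),
        z=range_m * math.sin(elevation_rad),
    )
```

For example, `range_from_time_of_flight(200e-9)` gives roughly 29.98 m, and `to_cartesian(10.0, 0.0, 0.0)` places a point 10 m straight ahead of the sensor.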

Key elements captured by the point cloud:

- Road geometry: Curves, slopes, lanes, and road edges.

- Static objects: Poles, traffic lights, barriers, and nearby buildings.

- Dynamic elements: The position, shape, and movement of other vehicles, cyclists, or pedestrians at the time of the event.
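Capturing the movement of dynamic elements means comparing scans taken at different times. As a deliberately crude sketch, assuming the points belonging to one object have already been segmented and matched across two timestamped scans, the object's velocity can be approximated from how far its centroid moves between them. The helper names are hypothetical; production perception stacks typically rely on dedicated tracking (for example, Kalman-filter-based trackers), because occlusion and changing viewing angles shift a raw centroid even when the object itself does not move.

```python
from typing import Sequence, Tuple

# A point as (x, y, z) in meters, in a fixed reconstruction frame (assumption).
Point = Tuple[float, float, float]


def centroid(points: Sequence[Point]) -> Point:
    """Mean position of the points segmented as belonging to one object."""
    if not points:
        raise ValueError("object has no points")
    n = len(points)
    return (
        sum(p[0] for p in points) / n,
        sum(p[1] for p in points) / n,
        sum(p[2] for p in points) / n,
    )


def estimate_velocity(
    points_t0: Sequence[Point], t0: float,
    points_t1: Sequence[Point], t1: float,
) -> Point:
    """Approximate velocity (m/s) from centroid displacement between two scans."""
    dt = t1 - t0
    if dt <= 0:
        raise ValueError("scans must be in increasing time order")
    c0 = centroid(points_t0)
    c1 = centroid(points_t1)
    return (
        (c1[0] - c0[0]) / dt,
        (c1[1] - c0[1]) / dt,
        (c1[2] - c0[2]) / dt,
    )
```

With four points of a car shifted 1.5 m forward between scans taken 0.5 s apart, the estimate is 3.0 m/s along x.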

The key is that the 3D model reflects the measured data as faithfully as possible, without assumptions or interpolations that alter the facts.

Simulating the Autonomous System's Perception and Decisions

With the environment modeled, experts can reproduce the situation. They convert the raw point cloud into a virtual environment that the vehicle's control software can process again. The simulation replicates all the conditions: visibility, the exact position of each object, and the data from every sensor (not just LIDAR). The goal is clear: see what the system saw and understand why it acted as it did. Investigators examine how the system classified each obstacle, whether it correctly predicted that obstacle's trajectory, and what logic led it to perform or skip an evasive maneuver.
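One quantity investigators can compute when judging whether an evasive maneuver was warranted is time to collision (TTC): the remaining gap divided by the closing speed, assuming both parties keep their current speeds. The sketch below is a simplified, one-dimensional illustration; the `Track1D` type, the function names, and the 2.0 s braking threshold are hypothetical and do not represent the decision logic of any particular autonomous driving system. Comparing the TTC recomputed from the reconstructed scene with the moment the system actually reacted is one way to probe whether its logic behaved as intended.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Track1D:
    """Position (m) and speed (m/s) along the ego vehicle's lane axis (assumption)."""
    position_m: float
    speed_m_s: float


def time_to_collision(ego: Track1D, obstacle: Track1D) -> float:
    """Seconds until contact at constant speeds; the obstacle is assumed ahead in the same lane.

    ego.position_m is the ego front bumper, obstacle.position_m its rear edge.
    Returns math.inf when the ego vehicle is not closing the gap.
    """
    gap = obstacle.position_m - ego.position_m
    if gap <= 0:
        return 0.0  # zero or negative gap: already in contact
    closing_speed = ego.speed_m_s - obstacle.speed_m_s
    if closing_speed <= 0:
        return math.inf
    return gap / closing_speed


def should_brake(ego: Track1D, obstacle: Track1D, ttc_threshold_s: float = 2.0) -> bool:
    """Hypothetical rule: trigger braking when TTC drops below the threshold."""
    return time_to_collision(ego, obstacle) < ttc_threshold_s
```

At 20 m/s toward a stationary obstacle 30 m ahead, the TTC is 1.5 s and the sketch flags braking; with the obstacle 60 m ahead, the TTC is 3.0 s and it does not.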

Simulation analysis phases:

- Recreate the interpretable environment: Convert raw data into a 3D mesh or virtual scene that the driving algorithm can process.

- Reproduce the sequence of events: Run the simulation with the same time and sensor parameters as the original vehicle.

- Isol