The Evolution of Hardware for Artificial Intelligence

Advanced computing is in constant transformation. The major processor manufacturers are competing to deliver increasingly powerful hardware for machine learning and language-model workloads.

"Innovation in processing architectures is advancing faster than ever," note high-performance computing experts.

Performance Testing in AI Systems

Current benchmarks evaluate multiple aspects of neural processing:

- Inference Speed: ability to generate responses in real time

- Energy Efficiency: power consumption per operation

- Scalability: performance in multi-GPU configurations
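As a rough illustration, the three criteria above can each be reduced to a simple ratio. The sketch below is a minimal example rather than the method of any particular benchmark suite: the function names are hypothetical, and the input figures in the usage section are made-up placeholders, not measurements.

```python
def tokens_per_second(tokens_generated: int, elapsed_seconds: float) -> float:
    """Inference speed: output tokens produced per second of wall-clock time."""
    return tokens_generated / elapsed_seconds


def joules_per_operation(average_power_watts: float,
                         elapsed_seconds: float,
                         operations: float) -> float:
    """Energy efficiency: energy (power x time) divided by operations performed."""
    return average_power_watts * elapsed_seconds / operations


def scaling_efficiency(single_device_throughput: float,
                       multi_device_throughput: float,
                       device_count: int) -> float:
    """Scalability: measured multi-device throughput relative to ideal linear scaling.

    A value of 1.0 means perfect linear scaling; lower values indicate overhead
    such as communication between devices.
    """
    return multi_device_throughput / (single_device_throughput * device_count)


if __name__ == "__main__":
    # Placeholder inputs chosen only to show the arithmetic (not real measurements).
    print(tokens_per_second(tokens_generated=1000, elapsed_seconds=4.0))
    print(joules_per_operation(average_power_watts=300.0,
                               elapsed_seconds=10.0,
                               operations=1.5e15))
    print(scaling_efficiency(single_device_throughput=250.0,
                             multi_device_throughput=1800.0,
                             device_count=8))
```

Expressing each criterion as a ratio makes results comparable across devices of different sizes, although real benchmark suites also fix the model, batch size, and accuracy targets before comparing numbers.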

Trends in Specialized Processing

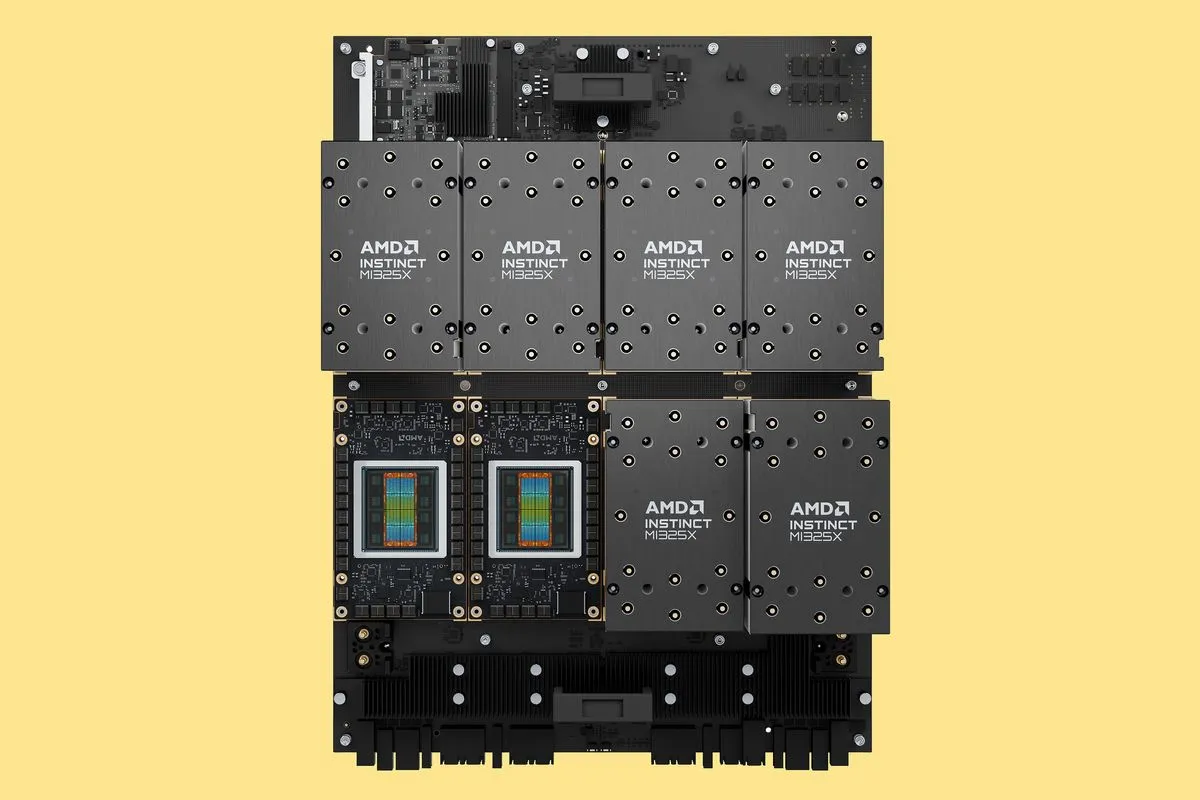

The latest generations of graphics accelerators incorporate innovative features:

- Ultra-high bandwidth memory

- Adaptive-precision compute units

- Low-latency chip-to-chip interconnects

The Current Competitive Landscape

While some companies lead the development of AI-specific hardware, others focus on optimizing traditional architectures. This diversity of approaches benefits the sector as a whole, driving improvements on all fronts.

Recent advances make it possible to process models with trillions of parameters, something unthinkable just five years ago. This capability opens new possibilities in scientific research, drug development, and natural language understanding.
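To give a sense of scale, a back-of-the-envelope calculation shows how much memory the weights of such a model occupy at different numeric precisions. This is a minimal sketch under stated assumptions: the function name is hypothetical, only the weights are counted (activations, optimizer state, and caches are ignored), and the byte sizes are the standard widths of each format.

```python
# Standard storage width, in bytes, of one parameter in each numeric format.
BYTES_PER_PARAMETER = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gigabytes(parameter_count: int, precision: str) -> float:
    """Memory needed to store the model weights alone, in gigabytes (10^9 bytes)."""
    return parameter_count * BYTES_PER_PARAMETER[precision] / 1e9


if __name__ == "__main__":
    one_trillion = 10**12
    for precision in ("fp32", "fp16", "int8"):
        gigabytes = weight_memory_gigabytes(one_trillion, precision)
        print(f"{precision}: {gigabytes:,.0f} GB")
```

Under these assumptions, a one-trillion-parameter model needs about 4,000 GB for its weights in 32-bit precision and about 2,000 GB in 16-bit precision, which helps explain why lower-precision arithmetic, high-bandwidth memory, and multi-device configurations all matter at this scale.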

Future Challenges

The main technical challenges today are:

- Reducing energy consumption

- Improving efficiency in complex tasks

- Simplifying the implementation of distributed systems

The next generation of processors promises significant advances in these areas, although the exact pace of innovation remains a matter of debate among industry analysts.