MV-TAP Improves Point Tracking in Multiview Video

A team of researchers has developed MV-TAP, a system that tracks points through video sequences captured simultaneously by multiple cameras. Instead of tracking each view in isolation, the method combines information from all views to build more complete trajectories that hold up better under occlusion. 🎯

An Approach That Combines Data Across Cameras

The system analyzes the sequences from all available cameras at the same time. At its core is a multiview attention mechanism that relates the features of each tracked point across views and across frames. This lets it keep following a point that is partially occluded in one view, as long as other views still see it, and in complex, dynamic scenes. The system also incorporates camera geometry, which further improves the accuracy of the trajectories it computes.
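The idea of attending across views and frames can be illustrated with a minimal sketch. It assumes each camera view yields one feature vector per frame for the tracked point and applies plain scaled dot-product attention over the pooled tokens. The names `gather_tokens` and `cross_view_attention` are hypothetical, and this is not MV-TAP's actual architecture, which the article does not detail.

```python
import math
from typing import List, Sequence

Vector = List[float]


def gather_tokens(features: List[List[Vector]]) -> List[Vector]:
    """Flatten features[view][frame] into one token list spanning all views and frames."""
    return [feat for view in features for feat in view]


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_view_attention(query: Vector, keys: List[Vector], values: List[Vector]) -> Vector:
    """Scaled dot-product attention of one query over tokens pooled from every view and frame."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    # Weighted sum of values: tokens similar to the query contribute most.
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]


if __name__ == "__main__":
    # Hypothetical 2-view, 2-frame features for one tracked point (2-D vectors for brevity).
    features = [
        [[1.0, 0.0], [0.9, 0.1]],  # view 0, frames 0 and 1
        [[0.0, 1.0], [0.1, 0.9]],  # view 1, frames 0 and 1
    ]
    tokens = gather_tokens(features)
    # Query with the view-0, frame-1 feature; keys and values are the pooled tokens.
    fused = cross_view_attention(features[0][1], tokens, tokens)
    print(fused)
```

Pooling tokens from every view and frame into a single softmax is what lets evidence from one camera inform the estimate in another. A real model would add learned projections, positional encodings, and camera-geometry cues on top of this bare mechanism.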

Key Features of the System:

- Processes information from multiple camera views jointly rather than one view at a time.

- Combines an attention mechanism with spatio-temporal data and camera geometry.

- Was trained and evaluated on a large synthetic dataset and several dedicated real-world test sets.

According to the researchers, MV-TAP outperforms existing point-tracking methods on these evaluations, establishing a new baseline for multiview point tracking.

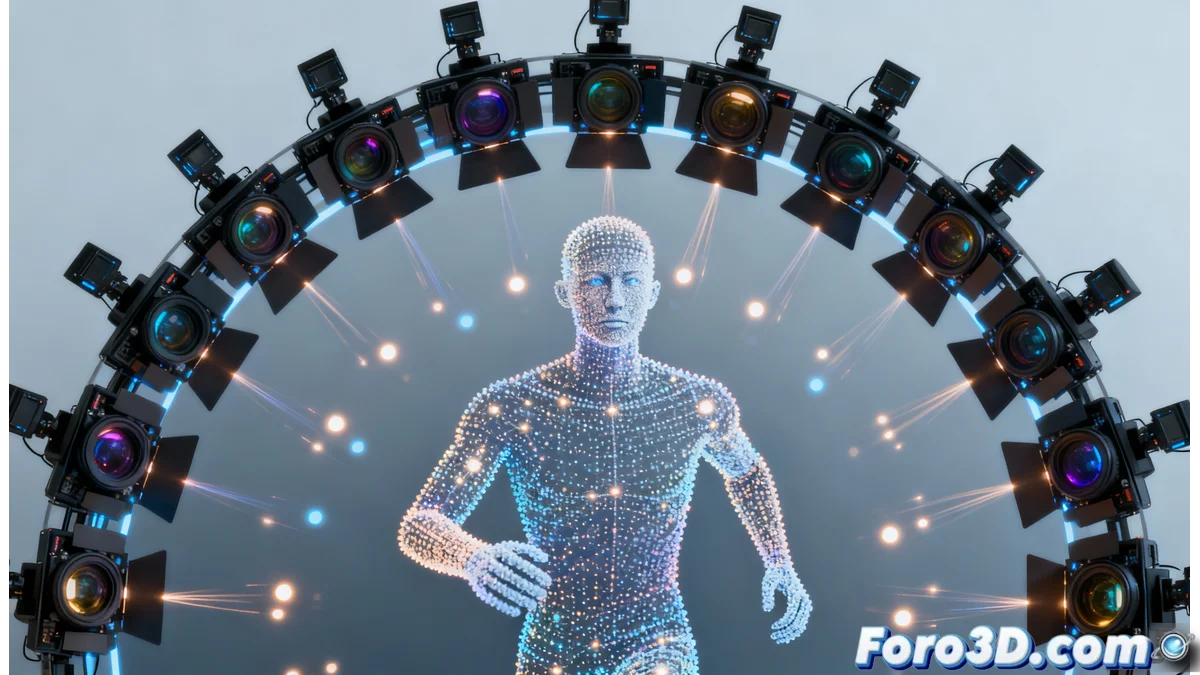

Practical Applications in Visual Production

This advance could improve several workflows in graphics software and game engines. More reliable point trajectories address a common production problem: tracks that break when a point is briefly hidden from one camera.

Benefits for Creative Software:

- Makes 3D scene reconstruction and motion capture more accurate.

- Helps calibrate cameras more precisely and track points for rotoscoping or for integrating visual effects coherently.

- In animation and compositing, helps stabilize multiview shots and recreate realistic trajectories for virtual cameras.
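As an illustration of the reconstruction use case, a point tracked in several calibrated views can be lifted to 3D with standard linear triangulation. The following sketch assumes a known 3x4 pinhole projection matrix for each camera and noise-free 2D track positions; the helper names are hypothetical, and this is a generic textbook technique, not part of MV-TAP itself.

```python
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def project(P: Matrix, X: Sequence[float]) -> Tuple[float, float]:
    """Project a 3D point X = (x, y, z) with a 3x4 pinhole camera matrix P."""
    Xh = list(X) + [1.0]
    u, v, w = (sum(P[r][c] * Xh[c] for c in range(4)) for r in range(3))
    return u / w, v / w


def solve3(A: Matrix, b: List[float]) -> List[float]:
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, 3):
            f = M[r][col] / M[col][col]
            for c in range(col, 4):
                M[r][c] -= f * M[col][c]
    x = [0.0] * 3
    for r in range(2, -1, -1):
        x[r] = (M[r][3] - sum(M[r][c] * x[c] for c in range(r + 1, 3))) / M[r][r]
    return x


def triangulate(cameras: List[Matrix], points2d: List[Tuple[float, float]]) -> List[float]:
    """Linear least-squares triangulation of one tracked point seen in several views."""
    rows, rhs = [], []
    for P, (u, v) in zip(cameras, points2d):
        for coord, r in ((u, 0), (v, 1)):
            # coord * (P[2] . X) - (P[r] . X) = 0 for X = (x, y, z, 1)
            a = [coord * P[2][c] - P[r][c] for c in range(4)]
            rows.append(a[:3])
            rhs.append(-a[3])
    # Normal equations (A^T A) X = A^T b give the least-squares solution.
    AtA = [[sum(row[i] * row[j] for row in rows) for j in range(3)] for i in range(3)]
    Atb = [sum(row[i] * bi for row, bi in zip(rows, rhs)) for i in range(3)]
    return solve3(AtA, Atb)


if __name__ == "__main__":
    P1 = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0]]   # camera at the origin
    P2 = [[1, 0, 0, -1], [0, 1, 0, 0], [0, 0, 1, 0]]  # camera shifted 1 unit along x
    X = [0.5, 0.2, 4.0]
    track = [project(P1, X), project(P2, X)]  # the point's 2D position in each view
    print(triangulate([P1, P2], track))
```

With two cameras one unit apart, projecting a known point and triangulating it back recovers the original coordinates up to floating-point error. With real, noisy tracks, more views and a nonlinear refinement step would typically be used.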

Impact and Current Limitations

Workflows in tools such as Blender, Maya, Houdini, Unreal Engine, Unity, and dedicated tracking software could benefit from this technology. Although the system produces more reliable trajectories, it still has limitations: it struggles with points that disappear completely behind obstacles in every available view. Even so, it marks a significant step toward processing multiview video more intelligently and automatically. 🚀
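That limitation can be made concrete with a small sketch. If each view reports a per-frame visibility flag for a tracked point, a multiview tracker has direct evidence in any frame where at least one camera sees the point, and none when every view is occluded. The flag layout `visibility[view][frame]` and both function names are illustrative assumptions, not MV-TAP's interface.

```python
from typing import List, Tuple


def observed_frames(visibility: List[List[bool]]) -> List[bool]:
    """For each frame, True if at least one view sees the point (visibility[view][frame])."""
    num_frames = len(visibility[0])
    return [any(view[t] for view in visibility) for t in range(num_frames)]


def fully_occluded_spans(visibility: List[List[bool]]) -> List[Tuple[int, int]]:
    """Inclusive (start, end) frame ranges in which no view observes the point."""
    spans: List[Tuple[int, int]] = []
    start = None
    for t, seen in enumerate(observed_frames(visibility)):
        if not seen and start is None:
            start = t  # an occlusion across all views begins
        elif seen and start is not None:
            spans.append((start, t - 1))  # the point reappears in some view
            start = None
    if start is not None:
        spans.append((start, len(visibility[0]) - 1))
    return spans


if __name__ == "__main__":
    visibility = [
        [True, False, False, True, False],  # view 0
        [False, True, False, True, False],  # view 1
    ]
    print(fully_occluded_spans(visibility))  # [(2, 2), (4, 4)]
```

Frames 0 and 1 each remain covered by one camera, which is where combining views pays off; frames 2 and 4 are hidden from both, so there the point's position can only be inferred, not observed.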