ManualVLA: A Framework that Enhances Robotic Planning with Multimodal Manuals

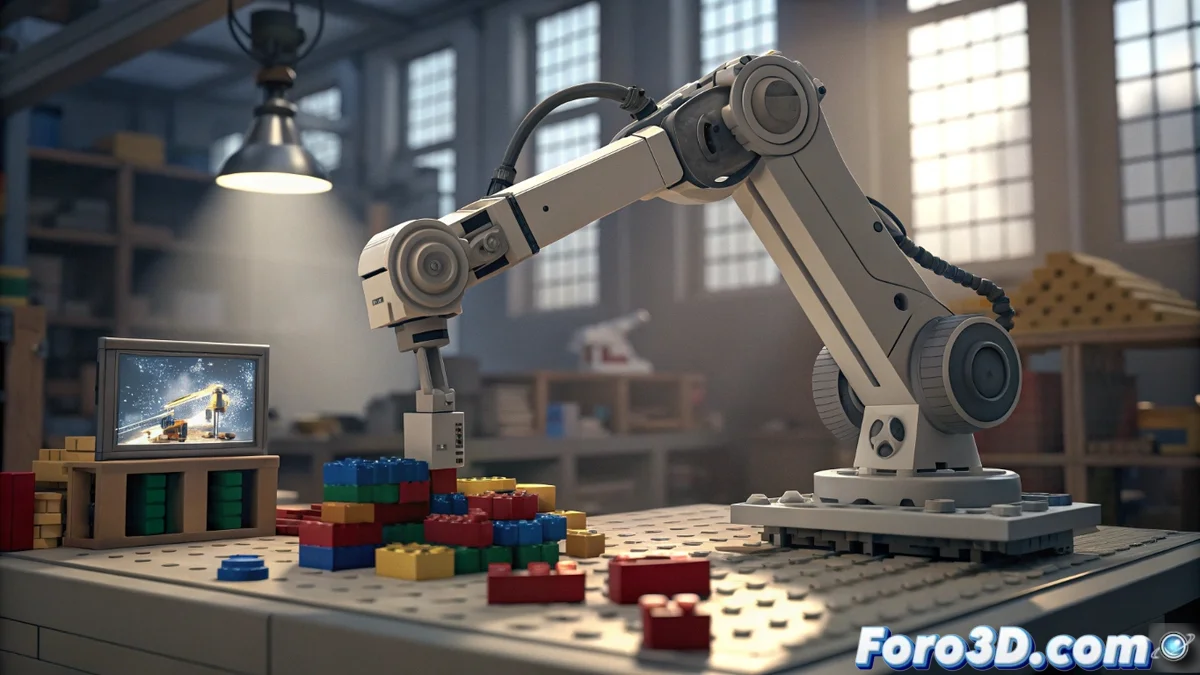

Vision-Language-Action (VLA) models stand out for their generalization capabilities, but they struggle with complex tasks that require a clearly defined goal state, such as assembling structures or reorganizing objects. Their main difficulty is coordinating high-level planning with precise manipulation. To address this, ManualVLA offers a unified framework that enables a VLA model to infer the process (the "how") from the desired outcome (the "what"), transforming abstract goals into executable action sequences. 🤖

Architecture with Two Experts and Guided Reasoning

ManualVLA is built on a Mixture-of-Transformers (MoT) architecture. Instead of mapping sensory observations directly to motor commands, it introduces a crucial intermediate step. First, a planning expert generates intermediate multimodal manuals that combine images, spatial indications, and text instructions. Then, a Manual Chain-of-Thought (ManualCoT) process feeds these manuals to an action expert. Each manual step provides explicit control conditions, while its latent representation serves as an implicit guide for precise manipulation.
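To make the two-expert idea concrete, here is a minimal pure-Python sketch of one MoT-style layer, assuming "Mixture-of-Transformers" in its common sense: each token is processed with the weights of the expert that owns it, while self-attention runs globally over all tokens. The names `mot_layer`, `ExpertWeights`, the `"plan"`/`"act"` labels, the tiny dimensions, and the absence of an attention mask are illustrative assumptions, not ManualVLA's actual implementation.

```python
import math
from dataclasses import dataclass

Vector = list[float]
Matrix = list[list[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a square matrix by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def softmax(xs: Vector) -> Vector:
    """Numerically stable softmax."""
    top = max(xs)
    exps = [math.exp(x - top) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


@dataclass
class ExpertWeights:
    """Per-expert parameters (hypothetical: all d x d matrices)."""
    wq: Matrix
    wk: Matrix
    wv: Matrix
    wffn: Matrix


def mot_layer(tokens: list[Vector], owners: list[str],
              experts: dict[str, ExpertWeights]) -> list[Vector]:
    """One MoT-style layer: expert-specific weights, shared global self-attention."""
    # Each token is projected with the weights of the expert that owns it.
    q = [matvec(experts[o].wq, t) for t, o in zip(tokens, owners)]
    k = [matvec(experts[o].wk, t) for t, o in zip(tokens, owners)]
    v = [matvec(experts[o].wv, t) for t, o in zip(tokens, owners)]
    d = len(tokens[0])
    out = []
    for i, qi in enumerate(q):
        # Global attention over ALL tokens (no mask, for simplicity), so action
        # tokens can read the planning expert's manual tokens.
        scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d) for kj in k]
        weights = softmax(scores)
        attended = [sum(w * vj[c] for w, vj in zip(weights, v)) for c in range(d)]
        h = [x + a for x, a in zip(tokens[i], attended)]  # residual connection
        # Expert-specific feed-forward (one ReLU layer) with a second residual.
        ff = [max(0.0, y) for y in matvec(experts[owners[i]].wffn, h)]
        out.append([x + y for x, y in zip(h, ff)])
    return out


identity = [[1.0, 0.0], [0.0, 1.0]]
half = [[0.5, 0.0], [0.0, 0.5]]
experts = {
    "plan": ExpertWeights(identity, identity, identity, identity),
    "act": ExpertWeights(identity, identity, identity, half),
}
owners = ["plan", "act"]
out_a = mot_layer([[1.0, 0.0], [0.0, 1.0]], owners, experts)
out_b = mot_layer([[2.0, 0.0], [0.0, 1.0]], owners, experts)
# The action token's output changes when only the planning token changes.
print(out_a[1] != out_b[1])  # True
```

The shared attention is what lets information flow from the planner's tokens into the action tokens, while the separate weights let each expert specialize.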

Key System Components:

- Planning Expert: Generates detailed manuals that break down the final task into understandable and executable steps.

- ManualCoT: A reasoning mechanism that structures the manual information and feeds it to the action expert.

- Action Expert: Translates the multimodal manual instructions into precise and coordinated robotic movements.
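The hand-off between these three components can be sketched as a plan-then-execute loop. This is a toy sketch under stated assumptions: the functions `planning_expert`, `build_condition`, `action_expert`, and `manual_cot`, the `ManualStep` fields, and the 2D linear waypoints are all hypothetical stand-ins for learned models. It only shows the data flow: each manual step yields an explicit condition (here, a target position) plus a latent that a learned action expert would use as implicit guidance.

```python
from dataclasses import dataclass

Point = tuple[float, float]


@dataclass
class ManualStep:
    """One step of a multimodal manual. All fields are illustrative stand-ins."""
    instruction: str      # text instruction
    target_xy: Point      # spatial indication: where the part should end up
    subgoal_image: str    # placeholder for a rendered subgoal image
    latent: list[float]   # placeholder for the step's latent representation


def planning_expert(goal: list[tuple[str, float, float]]) -> list[ManualStep]:
    """Toy planner: one manual step per part in the goal layout, in the given order."""
    return [
        ManualStep(
            instruction=f"place {name} at ({x}, {y})",
            target_xy=(x, y),
            subgoal_image=f"step_{i}.png",
            latent=[float(i), x, y],  # a learned planner would emit hidden states here
        )
        for i, (name, x, y) in enumerate(goal)
    ]


def build_condition(step: ManualStep) -> dict:
    """ManualCoT hand-off: explicit control condition plus implicit latent guide."""
    return {"explicit": step.target_xy, "implicit": step.latent}


def action_expert(condition: dict, start: Point, horizon: int = 4) -> list[Point]:
    """Toy policy: `horizon` waypoints moving linearly to the explicit target.
    The implicit latent is received but unused here; a learned action expert
    would attend to it to refine the motion."""
    (x0, y0), (x1, y1) = start, condition["explicit"]
    return [
        (x0 + (x1 - x0) * k / horizon, y0 + (y1 - y0) * k / horizon)
        for k in range(1, horizon + 1)
    ]


def manual_cot(goal: list[tuple[str, float, float]],
               start: Point = (0.0, 0.0)) -> list[Point]:
    """Plan a manual, then execute it step by step, chaining each chunk's end point."""
    trajectory: list[Point] = []
    position = start
    for step in planning_expert(goal):
        chunk = action_expert(build_condition(step), position)
        trajectory.extend(chunk)
        position = chunk[-1]
    return trajectory


trajectory = manual_cot([("red_brick", 0.3, 0.1), ("blue_brick", 0.5, 0.4)])
print(len(trajectory))  # 8
```

Separating "what to do next" (the manual step) from "how to move" (the action chunk) is the point of the design: the action expert never has to reason about the whole goal at once.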

ManualVLA achieves an average success rate 32% higher than the best previous hierarchical model on LEGO assembly and object reorganization tasks.

Automatically Generating Training Data

Training the planning expert requires large amounts of manual data, which is very costly to collect by hand. To overcome this obstacle, the team developed a digital twin toolkit based on 3D Gaussian Splatting. This toolkit automatically produces high-fidelity manual data, enabling efficient and scalable training of the planner without relying on extensive human annotation.
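At its core, 3D Gaussian Splatting renders each pixel by sorting the Gaussians that cover it by depth and alpha-blending them front to back, so the pixel color is $C = \sum_i c_i \alpha_i \prod_{j<i}(1 - \alpha_j)$. The sketch below implements that blending for a single pixel in pure Python and then, as a purely hypothetical illustration of manual generation, renders one frame per assembly step using only the Gaussians of parts placed so far. `Splat`, `part_id`, `manual_frames`, and the one-pixel "frames" are assumptions; the article does not describe the toolkit's internals.

```python
from dataclasses import dataclass

RGB = tuple[float, float, float]


@dataclass
class Splat:
    """A Gaussian's contribution at one pixel (already projected to 2D)."""
    depth: float   # view-space depth, used for sorting
    color: RGB
    alpha: float   # opacity times the 2D Gaussian falloff evaluated at this pixel
    part_id: int   # hypothetical: which assembly part this Gaussian belongs to


def composite_pixel(splats: list[Splat], background: RGB = (1.0, 1.0, 1.0)) -> RGB:
    """Front-to-back blending: C = sum_i c_i * a_i * prod_{j<i} (1 - a_j)."""
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0
    for s in sorted(splats, key=lambda s: s.depth):
        weight = s.alpha * transmittance
        for c in range(3):
            color[c] += weight * s.color[c]
        transmittance *= 1.0 - s.alpha
        if transmittance < 1e-4:  # stop once the pixel is effectively opaque
            break
    # Whatever light is left over shows the background.
    return (color[0] + transmittance * background[0],
            color[1] + transmittance * background[1],
            color[2] + transmittance * background[2])


def manual_frames(splats: list[Splat], assembly_order: list[int]) -> list[RGB]:
    """Hypothetical manual generator: render the scene after each assembly step,
    keeping only the Gaussians of parts placed so far (one pixel per frame here)."""
    frames = []
    for step in range(1, len(assembly_order) + 1):
        placed = set(assembly_order[:step])
        frames.append(composite_pixel([s for s in splats if s.part_id in placed]))
    return frames


scene = [
    Splat(depth=1.0, color=(1.0, 0.0, 0.0), alpha=0.5, part_id=0),  # red part, in front
    Splat(depth=2.0, color=(0.0, 0.0, 1.0), alpha=0.5, part_id=1),  # blue part, behind
]
print(manual_frames(scene, assembly_order=[0, 1]))
# [(1.0, 0.5, 0.5), (0.75, 0.25, 0.5)]
```

Because the scene is an explicit set of Gaussians, intermediate states can be rendered simply by including or excluding them, which is one plausible reason such a representation suits automatic manual generation.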

Advantages of the Digital Twin Toolkit:

- Creates realistic and varied synthetic data for training complex planning models.

- Drastically reduces the workload and cost of collecting real-world data.

- Allows manuals to be tested and refined in a simulated environment before physical execution.

Implications and Future Perspectives

This approach not only improves how robots plan and execute complex manipulation tasks but also makes it simpler to program them from scratch to follow instructions. By closing the gap between the final goal and the steps needed to achieve it, ManualVLA represents a significant advance toward more autonomous and capable robots. The framework establishes a new paradigm in which generating clear procedures is central to achieving robust and reliable robotic manipulation. 🧩