Lancium Says It Can Build One Gigawatt of AI Data Center Capacity in One Year

Michael McNamara, who leads Lancium, said his company can build the infrastructure for AI data centers that draw one gigawatt of power within twelve months. That pace matches the urgency of the sector, where tech giants are racing to scale their capabilities as fast as possible. 🚀

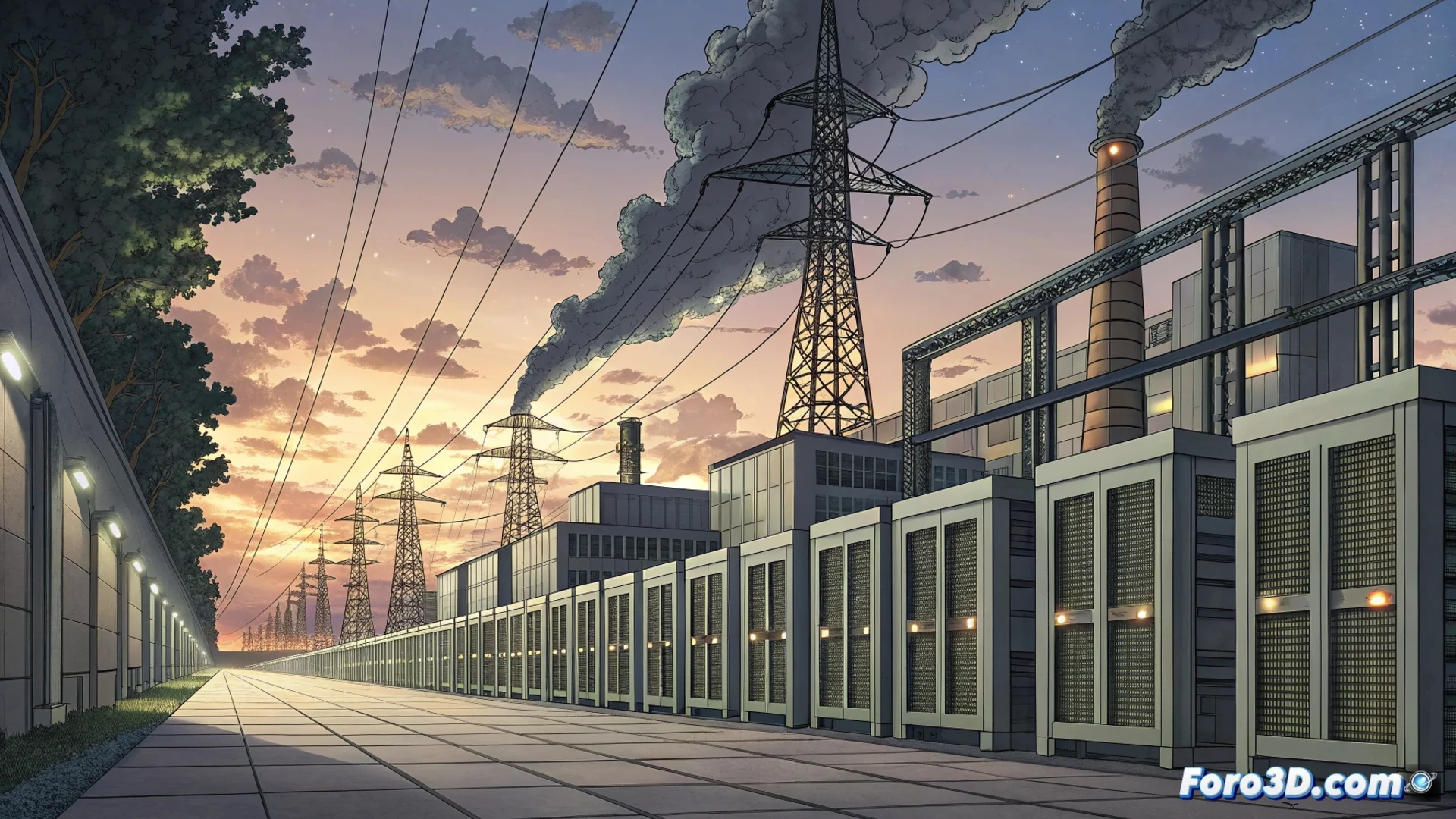

The Race for Energy to Power AI

The executive's remarks reflect the unprecedented pressure the AI industry is putting on power grids worldwide. Facilities that run advanced models consume enormous amounts of electricity, both to power graphics processing units (GPUs) and to remove the heat they generate. This demand pushes companies to seek partners that can deploy infrastructure quickly in locations with abundant energy, ideally from renewable sources.

Exponential Growth Objectives:

- Major tech companies aim to scale up to one gigawatt of new capacity every quarter.

- The next horizon is a pace of one gigawatt per month, or even faster.

- Deployment speed becomes a critical competitive factor.

Modular Infrastructure as a Key Solution

The jump from one gigawatt per year to one gigawatt per month shows how quickly the sector's requirements are evolving. Companies need not only to expand their computing power but to do so steadily and predictably to keep their advantage. This positions firms like Lancium, which specializes in flexible, modular data centers, as key players in enabling this rapid expansion. ⚡

Factors Driving This Demand:

- The need to run AI models that keep growing larger and more complex.

- The search for strategic locations with access to sustainable, affordable energy.

- The importance of scalable infrastructure that can be expanded quickly without major disruptions.

The Energy Future of the Tech Industry

Although concerns about the associated carbon footprint persist, the industry appears focused above all on finding the largest power sources for its supercomputers. Competitiveness in AI may depend largely on which company or provider manages to plug in the biggest extension cord and keep up with this massive appetite for electricity. The challenge is not only computational but fundamentally an energy problem. 🔌