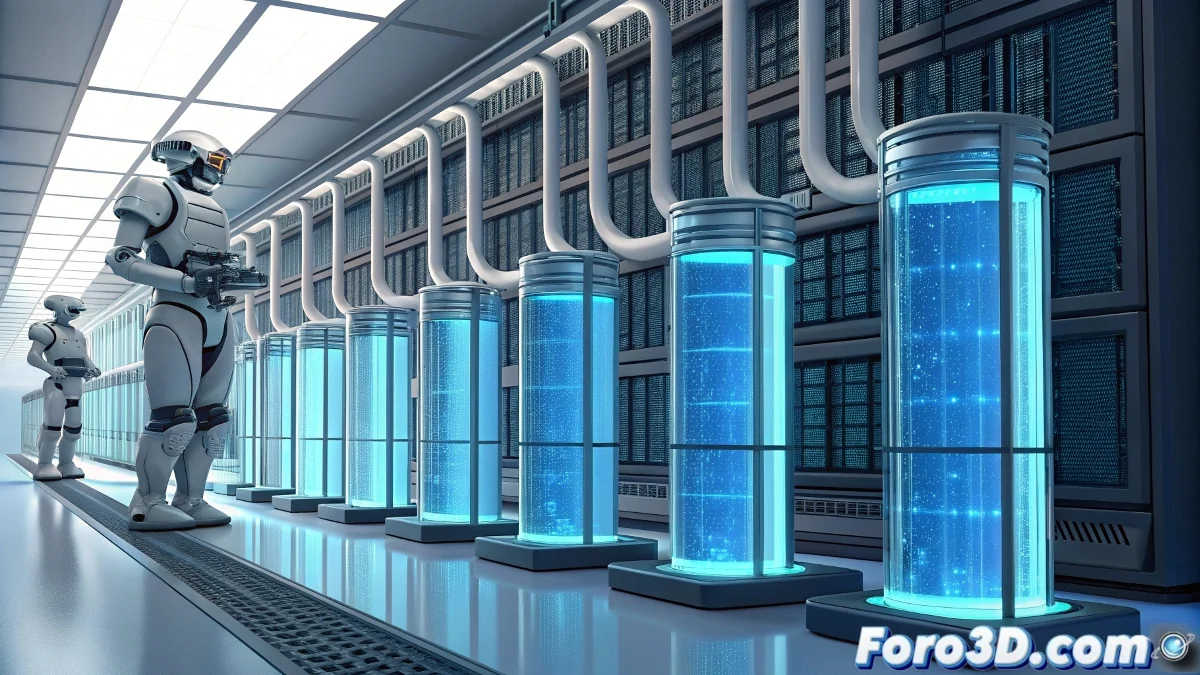

KSTAR and SuperX Revolutionize AI Infrastructure with Liquid Cooling and Radical Modularity

In a joint move that promises to transform AI data center design, KSTAR and SuperX have announced integrated solutions that pair direct-to-chip liquid cooling with modular servers built for the demands of accelerated computing. These innovations arrive at a critical moment, when thermal throttling and energy density have become the main bottlenecks for deploying large-scale AI infrastructure. The collaboration combines KSTAR's expertise in thermal management with SuperX's modular approach to server architecture, creating a unique proposition for the market. ❄️🖥️

The Thermal Challenge of Modern AI

With latest-generation GPUs consuming up to 700 W per unit and training clusters requiring power densities exceeding 50 kW per rack, traditional air cooling has reached its physical limits. KSTAR and SuperX address this problem through a systemic approach that integrates cooling and computing from the chip level to the entire data center.

Direct-to-Chip Liquid Cooling (DLC)

KSTAR's solution represents a significant advance in thermal efficiency, delivering coolant directly to the most intense heat sources.

Microchannel Technology

The cold plates use computationally optimized microchannel designs that maximize heat transfer while minimizing pressure drop, so a single loop can cool multiple components, including GPUs, CPUs, and HBM.

Advanced Dielectric Fluids

The system employs single- and two-phase fluids with superior thermal properties and zero short-circuit risk in case of leaks, with formulations that maintain stability even in continuous operation at high temperatures.

DLC Features:

- 50x the thermal efficiency of air cooling

- Capacity to dissipate over 1 kW per chip

- 90% reduction in fan energy

- 30-40°C lower junction temperatures

SuperX Modular Server Architecture

SuperX's modular servers introduce an interchangeable building-block approach that lets operators adapt the infrastructure to specific workloads.

Specialized Computing Modules

Each module is optimized for a specific type of workload (large model training, high-density inference, or graphics processing), so operators can mix and match modules as needs change.

Ultra-High-Speed Backplane

The system incorporates a backplane that supports NVLink, Infinity Fabric, and 400G Ethernet, maintaining low-latency interconnection between modules while greatly simplifying cabling.

"We are redefining the economics of AI. Our solutions enable data centers to increase computational density by 3x while reducing PUE below 1.1, something impossible with traditional cooling technologies."
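For readers unfamiliar with the metric, PUE (Power Usage Effectiveness) is the ratio of total facility power to the power delivered to IT equipment, so a value of 1.0 would mean zero overhead. The short sketch below shows how the ratio behaves. The load and overhead figures are hypothetical, chosen only to illustrate the arithmetic, and are not KSTAR or SuperX measurements.

```python
def pue(total_facility_kw: float, it_load_kw: float) -> float:
    """Power Usage Effectiveness: total facility power divided by IT power."""
    if it_load_kw <= 0:
        raise ValueError("IT load must be positive")
    return total_facility_kw / it_load_kw


# Hypothetical figures, for illustration only.
it_load_kw = 1000.0
air_cooled_overhead_kw = 500.0    # assumed cooling, power conversion, lighting
liquid_cooled_overhead_kw = 80.0  # assumed overhead with liquid cooling

print(round(pue(it_load_kw + air_cooled_overhead_kw, it_load_kw), 2))     # 1.5
print(round(pue(it_load_kw + liquid_cooled_overhead_kw, it_load_kw), 2))  # 1.08
```

Under these assumed numbers, staying below a PUE of 1.1 means all non-IT overhead must remain under 10% of the IT load.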

Seamless Integration

The true innovation lies in how both technologies integrate perfectly to create a cohesive solution.

Intelligent Distribution Manifolds

Liquid distribution systems monitor flow, temperature, and pressure in real time, dynamically adjusting flow rates according to each module's thermal load and anticipating needs before thermal throttling occurs.
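The physics behind load-proportional flow adjustment can be sketched with the standard heat balance Q = m_dot * c_p * dT: for a fixed coolant temperature rise, the required flow scales linearly with the module's heat load. The example below is a minimal illustration of that relationship, not KSTAR's control logic. It covers only the single-phase case, and the `Coolant` class, function names, fluid properties, and module loads are all hypothetical placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Coolant:
    """Fluid properties; real values would come from the fluid's datasheet."""
    density_kg_m3: float
    specific_heat_j_kg_k: float


def required_flow_lpm(heat_load_w: float, delta_t_k: float, coolant: Coolant) -> float:
    """Litres per minute needed to absorb heat_load_w with a coolant
    temperature rise of delta_t_k, from Q = m_dot * c_p * dT."""
    if delta_t_k <= 0:
        raise ValueError("temperature rise must be positive")
    mass_flow_kg_s = heat_load_w / (coolant.specific_heat_j_kg_k * delta_t_k)
    volume_flow_m3_s = mass_flow_kg_s / coolant.density_kg_m3
    return volume_flow_m3_s * 1000.0 * 60.0  # m^3/s -> L/min


def manifold_flows(loads_w: dict, delta_t_k: float, coolant: Coolant) -> dict:
    """Per-module flow targets for the current thermal loads."""
    return {name: required_flow_lpm(load, delta_t_k, coolant)
            for name, load in loads_w.items()}


# Placeholder properties and loads, for illustration only.
fluid = Coolant(density_kg_m3=1000.0, specific_heat_j_kg_k=4000.0)
loads_w = {"training-module": 8000.0, "inference-module": 4000.0}
for name, lpm in manifold_flows(loads_w, delta_t_k=10.0, coolant=fluid).items():
    print(f"{name}: {lpm:.1f} L/min")
```

With these placeholder values the module with twice the heat load is assigned twice the flow, which is the proportional behavior a manifold controller would aim for when it holds the temperature rise constant.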

Predictive Thermal Management

AI algorithms analyze workload patterns and historical thermal profiles to preemptively optimize cooling, reducing total system energy consumption while maximizing computational performance.
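The announcement does not describe the algorithms involved, so the following is only a rough sketch of the general idea of cooling ahead of demand. It substitutes Holt's linear exponential smoothing, a simple classical forecasting method, for whatever models the vendors actually use. The function names, smoothing constants, headroom factor, and sample readings are assumptions made for illustration.

```python
def holt_forecast(samples, alpha=0.5, beta=0.3, steps_ahead=1):
    """Forecast a future heat load (W) with Holt's linear exponential smoothing.

    alpha smooths the level, beta smooths the trend; both are assumed values.
    """
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    level = samples[0]
    trend = samples[1] - samples[0]
    for value in samples[1:]:
        previous_level = level
        level = alpha * value + (1 - alpha) * (level + trend)
        trend = beta * (level - previous_level) + (1 - beta) * trend
    return level + steps_ahead * trend


def cooling_target_w(current_w, forecast_w, headroom=1.1):
    """Cooling capacity to provision now: the larger of the current and
    forecast loads, plus an assumed 10% safety margin."""
    return max(current_w, forecast_w) * headroom


# Hypothetical recent heat-load readings for one module, in watts.
history_w = [3200.0, 3600.0, 4100.0, 4700.0]
predicted_w = holt_forecast(history_w, steps_ahead=2)
print(f"forecast: {predicted_w:.0f} W")
print(f"cooling target: {cooling_target_w(history_w[-1], predicted_w):.0f} W")
```

Because the target never drops below the current load, a forecast that underestimates demand cannot reduce cooling below what the module needs right now; the prediction can only raise cooling earlier.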

Operational and Economic Advantages

The combination of liquid cooling and modularity offers tangible benefits across multiple dimensions.

TCO Reduction

Users can expect 30-40% savings in total cost of ownership thanks to lower energy consumption, higher density, and a smaller physical footprint.

Operational Flexibility

The modular architecture allows gradual upgrades without replacing the entire infrastructure, extending the lifespan of investments while maintaining technological competitiveness.

Improved Performance Metrics:

- 95% sustained GPU utilization (vs. a typical 60-70%)

- 40% less time to train large models

- 60% reduction in energy per FLOP

- 3x greater density per rack footprint

Applications and Target Use Cases

The solutions are specifically designed for the most demanding challenges in accelerated computing.

Foundation Model Training

Clusters can maintain maximum performance through weeks of continuous training without degradation from thermal throttling, which is crucial for organizations developing their own large language models.

Web-Scale Inference

Systems are optimized to serve thousands of inferences per second with ultra-low latency, making them well suited to real-time generative AI applications and personalized recommendation services.

Sustainability and Energy Efficiency

In a context of growing scrutiny over AI energy consumption, these solutions offer significant advantages.

Heat Recovery

The systems are designed to integrate with data center heat recovery systems, allowing reuse of dissipated heat for building heating or other industrial processes.

Reduced Water Usage

Unlike traditional evaporative cooling systems, direct liquid cooling operates in closed loops with minimal water consumption and no risk of legionella.

Availability and Future Roadmap

KSTAR and SuperX have announced an aggressive deployment plan for their joint solutions.

Staged Launch

The first configurations will be available for enterprise customers in Q4 2024, with expansion to cloud providers and research centers during 2025.

Innovations in Development

The roadmap includes integration with immersion cooling for components not covered by DLC, as well as rack-level cooling systems that completely eliminate the need for traditional computer room air conditioning (CRAC) units.

The joint launch by KSTAR and SuperX represents a turning point in the evolution of AI infrastructure. By addressing thermal limits and architectural flexibility at the same time, these solutions not only solve immediate problems but also pave the way for the next generation of data centers needed to support the continued expansion of artificial intelligence across the digital economy. For organizations seeking to implement AI at scale, these innovations could mean the difference between leading the digital transformation and falling behind because of infrastructure limitations. 🌟🔧