Intel Designs a Massive Chiplet-Based Chip with HBM5 to Compete in AI

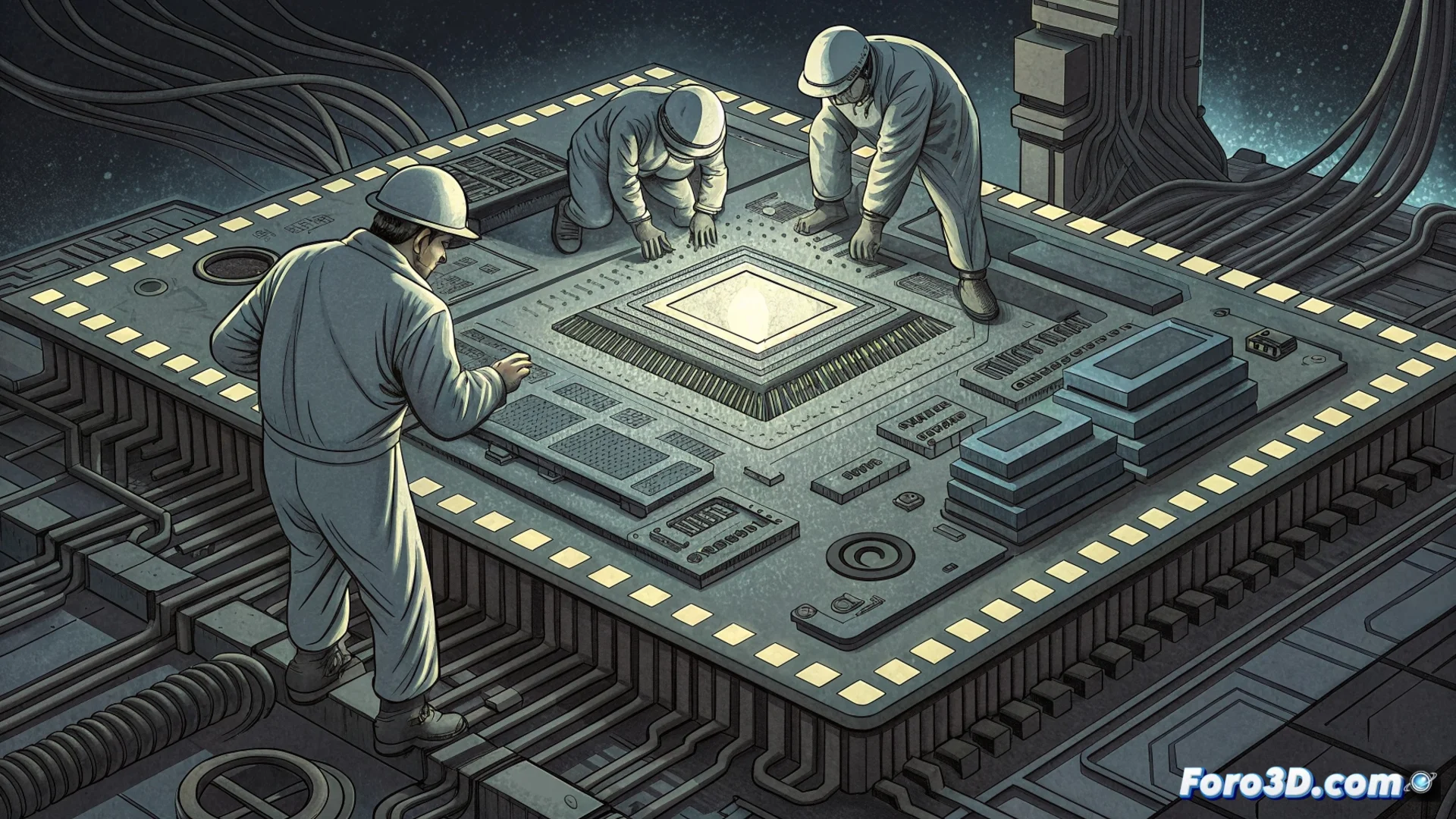

Intel is pressing ahead with its strategy to regain ground in the lucrative AI accelerator market. Its next move is a colossal chip that abandons the traditional monolithic design in favor of a modular chiplet architecture. The design integrates a massive amount of compute and memory into a single package and targets the demands of AI model training 🤖.

Power Comes from Uniting Many Fragments

The key to this project is the chiplet architecture. Instead of manufacturing a single giant piece of silicon, Intel plans to connect multiple specialized tiles on a common substrate. Because a smaller die is less likely to contain a manufacturing defect, this approach lets performance scale more efficiently and at lower cost. The interconnect between the tiles is fundamental, and the company relies on its own high-speed interconnect design to keep data flowing without bottlenecks.

Key Advantages of the Chiplet Approach:

- Allows building a more powerful chip without the difficulties of producing an extremely large monolithic die.

- Reduces manufacturing costs by improving silicon wafer yields.

- Facilitates using specialized cores for different tasks within the same package.
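The yield advantage listed above can be illustrated with the simple Poisson yield model, in which the chance that a die is defect-free falls exponentially with its area. This is only a rough sketch: the total area, the defect density, and the function names are illustrative assumptions rather than Intel figures, and it assumes tiles are tested before assembly while ignoring packaging losses.

```python
import math


def poisson_yield(area_cm2: float, defects_per_cm2: float) -> float:
    """Expected fraction of defect-free dies under the Poisson yield model."""
    return math.exp(-area_cm2 * defects_per_cm2)


def silicon_per_good_chip(total_area_cm2: float, n_tiles: int,
                          defects_per_cm2: float) -> float:
    """Average silicon area fabricated per working chip.

    Assumes the chip is split into n_tiles equal tiles, that each tile is
    tested before assembly, and that packaging itself never fails.
    """
    tile_area = total_area_cm2 / n_tiles
    return total_area_cm2 / poisson_yield(tile_area, defects_per_cm2)


# Hypothetical numbers chosen only for illustration.
TOTAL_AREA_CM2 = 8.0
DEFECTS_PER_CM2 = 0.1

monolithic = silicon_per_good_chip(TOTAL_AREA_CM2, 1, DEFECTS_PER_CM2)
sixteen_tiles = silicon_per_good_chip(TOTAL_AREA_CM2, 16, DEFECTS_PER_CM2)
print(f"monolithic: {monolithic:.2f} cm2 of silicon per good chip")
print(f"16 tiles:   {sixteen_tiles:.2f} cm2 of silicon per good chip")
```

Under these assumptions, the tiled design wastes far less silicon because a single defect scraps one small tile instead of the whole die.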

The race for the largest chip is no longer measured in square centimeters of silicon, but in how many 'tiles' you can integrate.

Massive Bandwidth Memory: The Fuel for AI

To feed at least sixteen processing cores, Intel's design includes an extraordinary amount of memory: twenty-four stacks of next-generation HBM5 within the same package. HBM (High Bandwidth Memory) stacks DRAM dies vertically, which provides the enormous bandwidth that AI workloads depend on. Placing the memory right next to the processor cores minimizes latency and speeds up data flow ⚡.

HBM5 Memory Features in This Context:

- Provides the bandwidth the numerous cores need to process data continuously.

- HBM5 promises higher speed and better energy efficiency than its predecessors.

- This advanced integration is ideal for handling the massive amounts of information required by modern AI models.
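The relationship between stack count and available bandwidth is simple arithmetic, sketched below. The stack and core counts come from the article, but the per-stack bandwidth is a placeholder, not an HBM5 specification, and the function names are hypothetical.

```python
HBM_STACKS = 24   # from the article
MIN_CORES = 16    # "at least sixteen" processing cores, per the article


def aggregate_bandwidth_tb_s(stacks: int, per_stack_tb_s: float) -> float:
    """Total memory bandwidth in TB/s if every stack runs at full rate."""
    return stacks * per_stack_tb_s


def bandwidth_per_core_tb_s(stacks: int, per_stack_tb_s: float,
                            cores: int) -> float:
    """Even share of the aggregate bandwidth for each core, in TB/s."""
    if cores <= 0:
        raise ValueError("cores must be positive")
    return aggregate_bandwidth_tb_s(stacks, per_stack_tb_s) / cores


# Placeholder value for illustration only; not a published HBM5 figure.
PLACEHOLDER_PER_STACK_TB_S = 1.0

total = aggregate_bandwidth_tb_s(HBM_STACKS, PLACEHOLDER_PER_STACK_TB_S)
share = bandwidth_per_core_tb_s(HBM_STACKS, PLACEHOLDER_PER_STACK_TB_S, MIN_CORES)
print(f"aggregate: {total} TB/s, per core: {share} TB/s")
```

Whatever the real per-stack figure turns out to be, the ratio of twenty-four stacks to sixteen cores means each core has the equivalent of one and a half stacks feeding it.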

A Strategic Move in a Dominated Market

This development is not just a technical exercise. It is a central pillar of Intel's strategy to compete head-on with established players like Nvidia and AMD. By building such a powerful system purpose-built for AI model training, Intel seeks to demonstrate that it can push past its own technical limits and offer a viable alternative. The ultimate goal is clear: capture a significant share of the high-performance accelerator market and regain technological leadership 🏆.