Google Expands TPU Production to Compete in AI

The launch of Gemini has focused attention on the hardware infrastructure that supports Google's artificial intelligence models. For years the company has relied on its Tensor Processing Units (TPUs), specialized chips designed to run the matrix operations behind AI models more efficiently than general-purpose GPUs. Now, a report projects a sharp increase in their production. 🚀

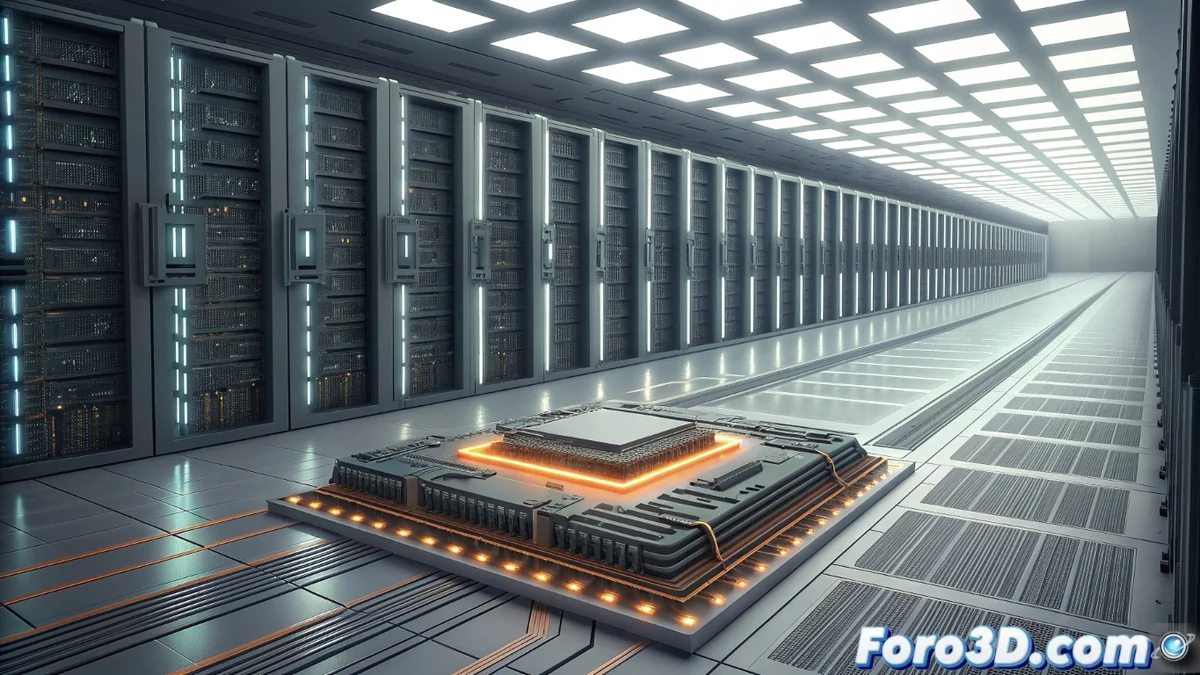

TSMC to Manufacture Millions of Accelerators for Google

According to Morgan Stanley analysts, the Taiwanese giant TSMC will produce approximately 3.2 million TPU chips for Google next year. The figure reflects the scale of the investment Google is making to sustain and grow its AI capabilities. Production at this volume cements TPUs as a central pillar of the company's internal infrastructure and positions them as a direct competitor to accelerators from Nvidia.

Implications of this massive production:

- Establishes Google as a major player in AI hardware design, beyond software.

- Demonstrates the ability to scale a critical component internally, avoiding market bottlenecks.

- Reinforces the strategy of vertically integrating the technology stack, from the chip to the final model.

While some teams wait months to get GPUs, Google simply orders millions of its own chips.

The Strategic Advantage of In-House Hardware

Developing its own accelerators gives Google direct control over the performance and cost of operating its AI models at scale. By optimizing the silicon specifically for frameworks like TensorFlow, the company seeks a decisive advantage in efficiency. The move is part of a broader trend in which large technology companies are reducing their dependence on external suppliers for critical components.

Key benefits of in-house TPUs:

- Optimize performance: The chips are designed for Google's exact workloads, avoiding the overhead of general-purpose hardware.

- Reduce operating costs: Greater energy and computational efficiency translates to savings at data center scale.

- Mitigate shortages: Designing its own silicon leaves Google less exposed to supply constraints on third-party accelerators.

A Future Defined by Specialized Silicon

Google's bet on TPUs goes beyond a single component; it is a statement of technological sovereignty. In a landscape where the ability to train and run AI models defines leadership, controlling the underlying hardware becomes a strategic asset. This large-scale production with TSMC will not only power Gemini but also lay the foundation for the next generation of models, helping Google innovate without the constraints of the general-purpose chip market. The race for AI is increasingly fought in semiconductor foundries. ⚙️