MemFlow Generates Long Videos While Maintaining Visual Coherence

Creating long, coherent video sequences is a significant technical challenge. Traditional methods often compress past frames with rigid, fixed strategies, which limits their ability to reference diverse visual cues from earlier in the video. MemFlow introduces a dynamic approach that adapts how a model stores and reuses historical information. 🎬

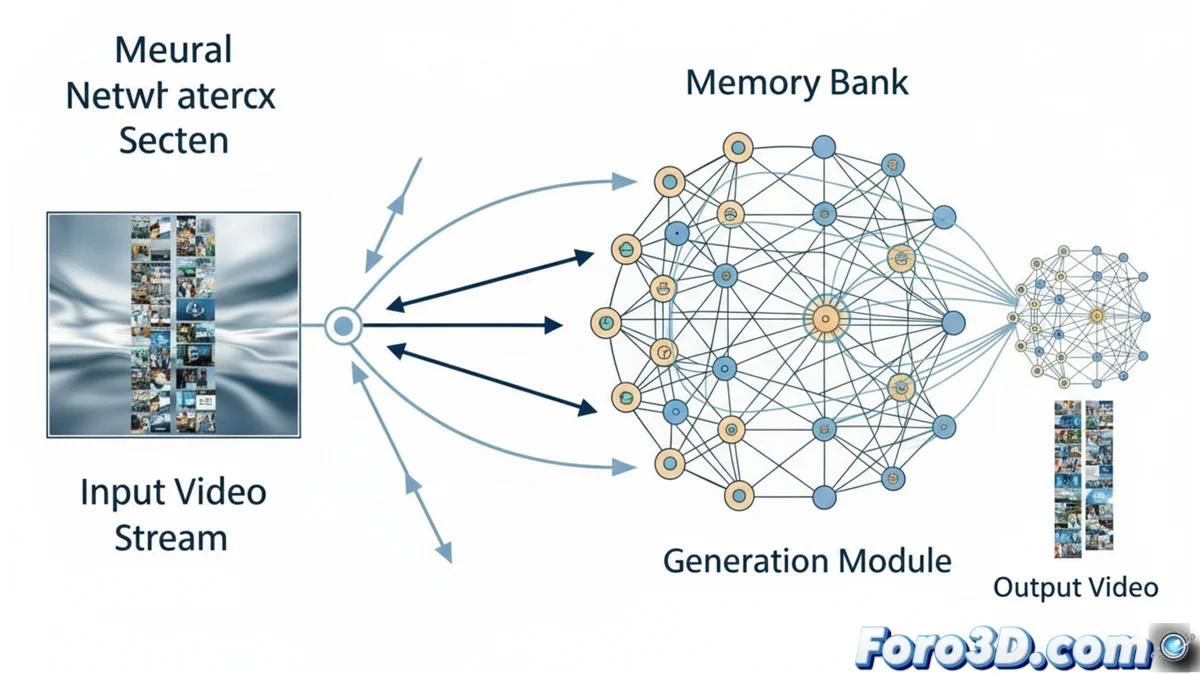

A Memory Bank that Adapts to Context

The core innovation of MemFlow is a memory bank that is updated adaptively as generation proceeds. Before producing a new video segment, the system analyzes the text prompt for that segment and uses it to retrieve the most relevant historical frames from the memory bank. This lets the model locate the right visual context for the upcoming segment and keeps semantic transitions smooth when new events appear or the scene changes significantly.
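To make the retrieval step concrete, here is a minimal sketch. It assumes each stored frame and the segment's prompt have already been embedded into vectors of the same size, and it uses cosine similarity as a stand-in relevance score; MemFlow's actual scoring may differ. The names `cosine`, `retrieve_memory`, and the parameter `k` are hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve_memory(prompt_emb, frame_embs, k):
    """Hypothetical helper: indices of the k stored frames most similar to the prompt.

    Indices are returned in ascending (chronological) order so the retrieved
    context keeps the original frame ordering.
    """
    scored = [(cosine(prompt_emb, emb), idx) for idx, emb in enumerate(frame_embs)]
    best = sorted(scored, key=lambda pair: pair[0], reverse=True)[:k]
    return sorted(idx for _, idx in best)


# Usage: a toy 2-D "embedding space" with four stored frames.
prompt = [1.0, 0.0]
frames = [[0.9, 0.1], [0.0, 1.0], [0.7, 0.7], [1.0, 0.0]]
print(retrieve_memory(prompt, frames, k=2))  # → [0, 3]
```

Because the prompt changes from segment to segment, the same memory bank can yield a different set of frames for each new segment, which is the behavior the paragraph above describes.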

Key advantages of the dynamic system:

- Contextual precision: Retrieves the past visual information the current segment actually needs, instead of relying on a fixed window.

- Smooth transitions: Maintains narrative and visual continuity even with abrupt changes in action or environment.

- Implementation flexibility: Compatible with any streaming video generation model that uses a key-value cache.
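The compatibility point follows from where the memory lives: if retrieved memory is kept as key-value entries, it can be concatenated with the model's local key-value cache and read by ordinary attention, without changing the attention layers. The sketch below illustrates that idea with plain lists; `attend_with_memory` and the `(keys, values)` pair layout are hypothetical, not MemFlow's API.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    peak = max(xs)
    exps = [math.exp(x - peak) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Plain scaled dot-product attention for a single query vector."""
    scale = 1.0 / math.sqrt(len(query))
    scores = [scale * sum(q * x for q, x in zip(query, key)) for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    return [sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim)]


def attend_with_memory(query, memory_kv, local_kv):
    """Hypothetical helper: read retrieved memory and the recent local cache in one pass.

    Both caches are (keys, values) pairs of lists; memory entries are simply
    prepended, so the attention function itself is unchanged.
    """
    mem_keys, mem_values = memory_kv
    local_keys, local_values = local_kv
    return attend(query, mem_keys + local_keys, mem_values + local_values)
```

With an empty memory bank, `attend_with_memory` reduces to ordinary attention over the local cache, which illustrates why this style of memory can sit on top of an existing KV-cache model without modifying its attention code.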

MemFlow achieves strong coherence over long contexts with little computational overhead: generation is only 7.9% slower than the same base model without memory.
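A 7.9% drop in generation speed corresponds to a slightly larger increase in time per frame, because time per frame is the inverse of throughput. The helper names below and the 20 FPS baseline are hypothetical; only the 7.9% figure comes from the text.

```python
def throughput_after_slowdown(baseline_fps, slowdown=0.079):
    """Frames per second after a relative speed reduction (default: the 7.9% from the text)."""
    return baseline_fps * (1.0 - slowdown)


def latency_increase(slowdown=0.079):
    """Relative growth in per-frame time implied by a relative throughput drop.

    Time per frame is 1 / FPS, so a speed factor of (1 - slowdown)
    stretches each frame's time by 1 / (1 - slowdown).
    """
    return 1.0 / (1.0 - slowdown) - 1.0


baseline = 20.0  # hypothetical baseline throughput, not a reported number
print(round(throughput_after_slowdown(baseline), 2))  # → 18.42
print(round(latency_increase(), 3))  # → 0.086
```

In other words, a 7.9% speed reduction means each frame takes roughly 8.6% longer to produce.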

Selective Activation for Maximum Efficiency

During generation, the model also needs to stay efficient. MemFlow addresses this by activating only the memory it needs: in each attention layer, every query attends only to the most relevant tokens stored in the memory bank. Skipping irrelevant memory entries avoids wasted computation and keeps generation fast.

How the efficient process works:

- Targeted query: The model searches the memory only for information crucial to the current segment.

- Optimized computation: Because only a subset of the historical memory is activated for each query, computation is saved.

- Coherent result: High-quality video is generated while maintaining a unified visual narrative over time.
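The selective activation step can be sketched as top-k attention over the memory bank. This is a minimal illustration under stated assumptions: relevance is the scaled dot product between a query and each memory key, selection happens per token, and `sparse_memory_attention` and `k` are hypothetical names. MemFlow's real selection granularity and scoring may differ.

```python
import math


def sparse_memory_attention(query, mem_keys, mem_values, k):
    """Hypothetical top-k attention: one query reads only its k best-matching memory tokens.

    Returns (output_vector, selected_indices). Assumes at least one memory entry.
    """
    scale = 1.0 / math.sqrt(len(query))
    # Score every memory key (scaled dot product); this part is still dense here.
    scores = [scale * sum(q * x for q, x in zip(query, key)) for key in mem_keys]
    # Keep only the k highest-scoring memory tokens for this query.
    selected = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    # Softmax restricted to the selected tokens (max-subtracted for stability).
    peak = max(scores[i] for i in selected)
    weights = {i: math.exp(scores[i] - peak) for i in selected}
    total = sum(weights.values())
    # Weighted sum of the selected values only; the rest are never read.
    out = [0.0] * len(mem_values[0])
    for i, w in weights.items():
        for j, v in enumerate(mem_values[i]):
            out[j] += (w / total) * v
    return out, sorted(selected)


# Usage: the low-scoring token with value 100.0 is skipped entirely.
keys = [[1.0, 0.0], [0.0, 1.0], [2.0, 0.0]]
values = [[1.0], [100.0], [3.0]]
out, chosen = sparse_memory_attention([1.0, 0.0], keys, values, k=2)
print(chosen)  # → [0, 2]
```

In this naive version every memory key is still scored; the savings come from restricting the softmax and the value aggregation to k entries. The scoring cost can be reduced further, for example by scoring coarser per-frame summaries instead of every token.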

The Future of Consistent Video Generation

MemFlow represents a practical advance for long-duration streaming video generation. By replacing static memory strategies with a dynamic, text-guided one, it addresses a core cause of incoherence in extended sequences. The next time a character in a generated video inexplicably changes attributes between shots, a system like this may be the fix. Its design balances visual quality and operational efficiency. 🚀