A Hybrid Approach for Training Data in Autonomous Laboratories

Autonomous laboratories hit a data wall. To detect failures reliably, computer vision systems need large numbers of annotated examples, and those are especially scarce for negative events such as errors. This work tackles that barrier with a hybrid strategy that combines the best of two worlds: the fidelity of real data and the abundance of synthetic data. 🧪🤖

Overcoming Data Scarcity with a Dynamic Duo

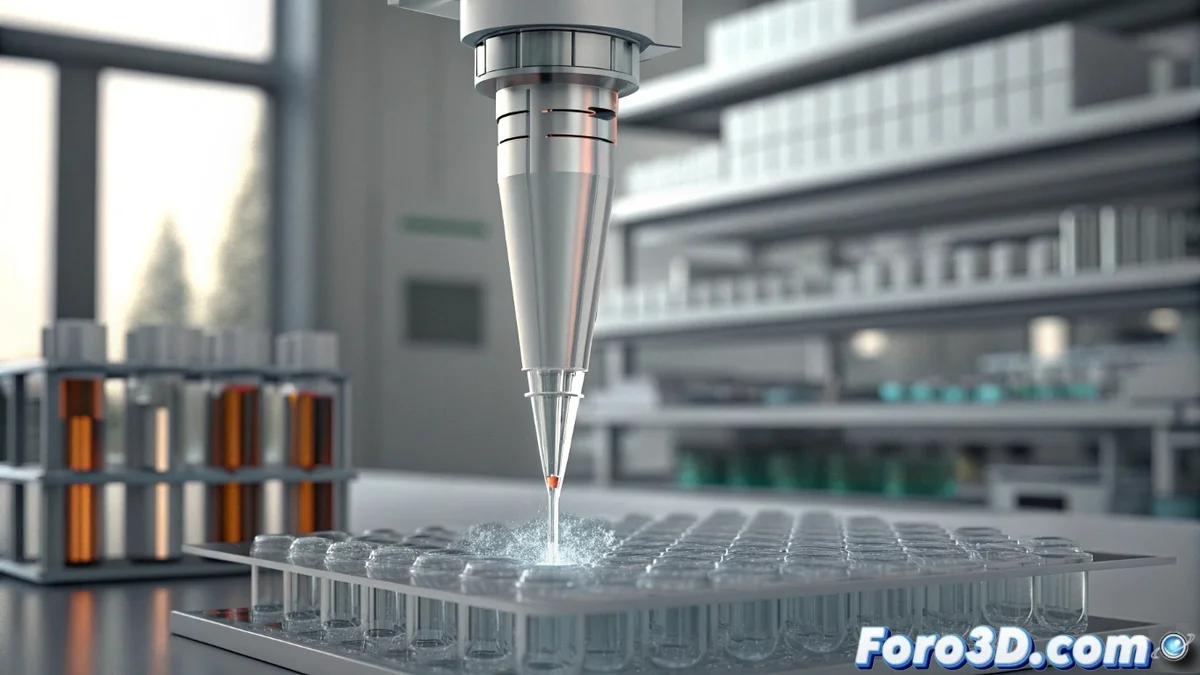

The core of the solution is a dual-path data pipeline. On the real path, data capture is optimized through a human-in-the-loop scheme: automated image acquisition is combined with selective human verification, which keeps annotation quality high while limiting operator fatigue. In parallel, a virtual generation path creates high-fidelity synthetic images. Using prompt-guided, reference-conditioned image generation, this path produces many additional examples, including the elusive negative cases (such as the absence of bubbles or manipulation errors) that are crucial for training.

Pillars of the hybrid pipeline:

- Real acquisition with human verification: System that automatically captures images in the laboratory and subjects them to an efficient manual validation filter, ensuring an extremely precise base dataset.

- Conditioned synthetic generation: Use of generative models to create realistic images of pipetting scenarios, both successful and failed, massively expanding the dataset, especially in infrequent categories.

- Filtering and cross-validation: A critical step where generated images are evaluated and refined to ensure their utility and coherence before integration into the final training set.
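The three pillars above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the authors' implementation: the `Sample` fields, the two thresholds, and the `human_label` callback are hypothetical names introduced here, and the scores stand in for whatever confidence or quality measure a real system would produce.

```python
from dataclasses import dataclass, replace
from typing import Callable, List, Tuple


@dataclass(frozen=True)
class Sample:
    image_id: str
    label: str    # e.g. "bubble" or "no_bubble"
    source: str   # "real" or "synthetic"
    score: float  # hypothetical: auto-label confidence (real) or quality score (synthetic)


def verify_real(
    samples: List[Sample],
    human_label: Callable[[Sample], str],
    confidence_threshold: float = 0.9,
) -> Tuple[List[Sample], int]:
    """Send only low-confidence real images to a human; keep the rest as-is."""
    verified, reviewed = [], 0
    for sample in samples:
        if sample.score < confidence_threshold:
            # Selective verification: the human's label replaces the automatic one.
            sample = replace(sample, label=human_label(sample), score=1.0)
            reviewed += 1
        verified.append(sample)
    return verified, reviewed


def filter_synthetic(samples: List[Sample], quality_threshold: float = 0.8) -> List[Sample]:
    """Keep only generated images whose quality score passes the filter."""
    return [s for s in samples if s.score >= quality_threshold]


def build_hybrid_dataset(
    real: List[Sample],
    synthetic: List[Sample],
    human_label: Callable[[Sample], str],
    confidence_threshold: float = 0.9,
    quality_threshold: float = 0.8,
) -> Tuple[List[Sample], int]:
    """Merge verified real images with filtered synthetic ones."""
    verified, reviewed = verify_real(real, human_label, confidence_threshold)
    kept = filter_synthetic(synthetic, quality_threshold)
    return verified + kept, reviewed


# Toy example: one real image needs review, one synthetic image is rejected.
real = [
    Sample("r1", "no_bubble", "real", 0.98),
    Sample("r2", "no_bubble", "real", 0.55),
]
synthetic = [
    Sample("s1", "bubble", "synthetic", 0.91),
    Sample("s2", "bubble", "synthetic", 0.40),
]
dataset, reviewed = build_hybrid_dataset(real, synthetic, human_label=lambda s: "bubble")
print([s.image_id for s in dataset], "human reviews:", reviewed)
```

The point of the design is that human effort scales with the number of uncertain images rather than with the size of the dataset, while the quality filter keeps weak generated images out of the training set.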

"The answer to training machines with near-perfect precision is not in choosing between real and virtual, but in wisely blending them."

Results that Validate the Strategy: Precision Close to 100%

The acid test for any training method is real-world results. The approach was applied to bubble detection in pipetting, a task where a single failure can compromise an entire experiment. A model trained only on automatically acquired real data achieved 99.6% precision. A model trained on the hybrid mix of real and synthetic data reached 99.4%. The gap of 0.2 percentage points indicates that the generated images can be mixed into training with almost no loss in performance, which supports their quality and usefulness for machine learning.
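To make the comparison concrete, the snippet below computes precision as TP / (TP + FP) and the gap between the two models. The text reports only the percentages, so the counts used here are hypothetical, chosen solely so that the arithmetic reproduces 99.6% and 99.4%.

```python
def precision(true_positives: int, false_positives: int) -> float:
    """Fraction of positive predictions that are correct: TP / (TP + FP)."""
    predicted_positives = true_positives + false_positives
    if predicted_positives == 0:
        raise ValueError("precision is undefined when nothing is predicted positive")
    return true_positives / predicted_positives


# Hypothetical counts (not from the study), picked to match the reported percentages.
real_only = precision(true_positives=996, false_positives=4)
hybrid = precision(true_positives=994, false_positives=6)

# Difference expressed in percentage points.
gap_points = (real_only - hybrid) * 100
print(f"real only: {real_only:.1%}, hybrid: {hybrid:.1%}, gap: {gap_points:.1f} points")
```

With these illustrative counts, the hybrid model makes two more false positive calls per thousand positive predictions than the real-only model.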

Impact and applicability of the method:

- Drastic reduction in manual workload: Radically decreases the time and cost associated with collecting and manually annotating large volumes of data, especially for rare events.

- Scalable and cost-effective solution: Provides a reproducible framework to feed visual feedback systems in any autonomous laboratory workflow.

- Application beyond pipetting: The strategy should transfer to other computer vision challenges in science where detecting anomalies or low-frequency events is critical, such as identifying contamination in cultures or spotting equipment failures.

Conclusion: The Perfect Synergy Between Human and AI

This hybrid approach marks a clear path to overcome the data bottleneck in scientific automation. It's not about replacing the researcher, but about enhancing their judgment through selective verification, and complementing reality with controlled artificial imagination to cover all scenarios. The revolution of autonomous laboratories thus advances on a more solid pillar: robust vision models, trained with abundant and diverse data, capable of discerning right from wrong with unprecedented reliability. 🔬✨