A Critical Theory of Artificial Intelligence: The Limits of Technological Progress

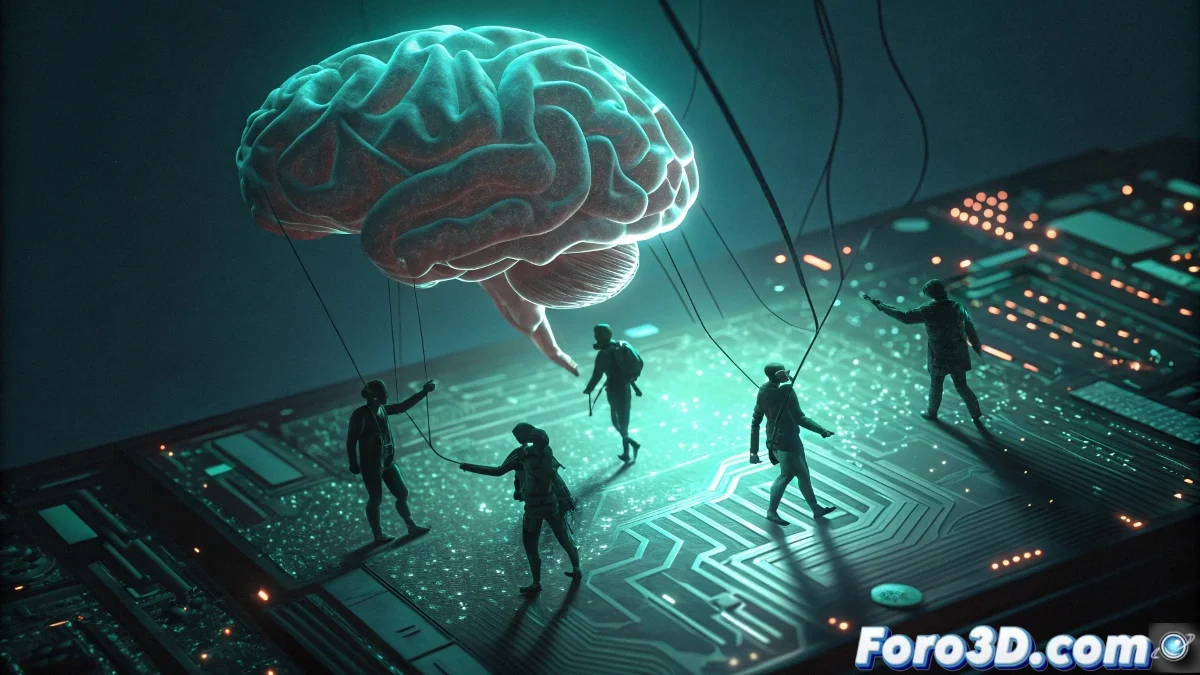

Against the hegemonic narrative that presents AI as an inevitable and benevolent force of progress, a critical theory has emerged that takes this story apart to expose its fundamental contradictions. Artificial intelligence is not simply a neutral technical tool, but a political and cultural artifact that amplifies existing power dynamics, reproduces structural inequalities, and deeply transforms human relationships. This approach forces us to ask not only what AI can do, but for whom it works and what kind of society it builds. 🔍

The Failure of Technical Neutrality: Code with Embedded Ideology

The fundamental myth that critical theory dismantles is the supposed neutrality of AI systems. Every algorithm, every dataset, every optimization metric contains values and judgments embedded by its creators. When a hiring system penalizes women or facial recognition tools fail with people of color, we are not facing "technical errors" but structural prejudices encoded as computational logic. AI does not escape the society that produces it; it refracts and amplifies it. ⚖️

Dimensions of Non-Neutrality:

- biases in training data that reflect historical inequalities

- Western cultural values embedded as universal

- definitions of "success" and "efficiency" loaded with ideology

- prioritization of technical solutions over structural transformations
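To make the hiring example concrete, here is a minimal sketch of how an outside auditor might measure unequal outcomes in a system's decisions. Everything in it is an assumption for illustration: the function names `selection_rates` and `disparate_impact_ratio` are hypothetical, the outcome data is invented, and the 0.8 threshold borrows the "four-fifths" rule of thumb from US employment guidelines. It shows one possible check, not a complete fairness audit.

```python
from collections import defaultdict

def selection_rates(decisions):
    """Return the share of positive decisions per group.

    `decisions` is an iterable of (group, hired) pairs.
    """
    totals = defaultdict(int)
    hires = defaultdict(int)
    for group, hired in decisions:
        totals[group] += 1
        hires[group] += int(hired)
    return {group: hires[group] / totals[group] for group in totals}

def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received any positive decision
    return min(rates.values()) / highest

# Invented outcomes of a hypothetical screening system (not real data).
decisions = (
    [("men", True)] * 30 + [("men", False)] * 70
    + [("women", True)] * 15 + [("women", False)] * 85
)

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)

# Assumed threshold: the four-fifths rule of thumb.
flagged = ratio < 0.8
print(rates, ratio, flagged)
```

A number like this only describes the symptom. It says nothing about why the training data or the definition of a "good candidate" produced the gap, which is precisely the question critical theory asks.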

Surveillance Capitalism: The Political Economy of AI

The current development of AI is deeply intertwined with platform capitalism, where personal data becomes raw material and behavioral predictions become the product. Companies like Google, Meta, and Amazon have built their dominance precisely through the mass extraction and processing of personal information. AI is not just another technology in this ecosystem; it is the engine of accumulation that converts human experience into economic value on an unprecedented scale. 📊

We do not fear conscious machines; we fear unconscious machines with power over our lives.

Digital Techno-Feudalism: The New Concentration of Power

Critical theory identifies the emergence of a new technological feudalism where a few corporations control the computational infrastructure essential for social functioning. These "cloud lords" own the means of digital production in the same way medieval landowners controlled the land. The result is an unprecedented concentration of power: whoever controls the most advanced AI models controls more and more aspects of economic, social, and even political life. 🏰

Mechanisms of Power Concentration:

- monopoly on massive-scale training data

- control of critical computational infrastructures

- patents on fundamental architectures and techniques

- regulatory capture through lobbying and "revolving doors"

The Illusion of Technological Determinism: We Have Alternatives

Against the narrative of technological determinism, which presents the current development of AI as inevitable, critical theory insists that alternatives exist. We can imagine cooperative AI models, decentralized systems, and development centered on the commons rather than private profit. The question is not "what will AI do to us?" but "what kind of AI do we want to build?" This perspective recovers collective human agency over the technological future. 🌱

Towards an Emancipatory AI: Principles for Another Possible Development

Critical theory does not limit itself to denunciation; it proposes concrete alternatives. These include transparent and auditable AI models, development led by affected communities, systems designed to reduce rather than amplify inequalities, and legal frameworks that prioritize human rights over corporate efficiency. An emancipatory AI would be one that distributes power instead of concentrating it, that amplifies human capacities without dispossessing anyone, and that serves social justice instead of reproducing existing privileges. ✊

Principles for a Critical and Emancipatory AI:

- radical transparency and external audit capability

- design centered on the most vulnerable communities

- democratic control over key infrastructures

- social impact assessment before deployment

A critical theory of artificial intelligence reminds us that technology is not destiny, but a field of political struggle. Against a techno-solutionism that promises easy answers to complex problems, this perspective insists that there are no technological shortcuts to social justice. The future of AI is not written in code; it will be the result of collective struggles over which values guide our technological development and for whom it works. The crucial question remains: technology for liberation or for control? ⚖️